Design research is how you figure out what users actually need before burning the budget on features they'll ignore.

It's the systematic investigation of user behavior, pain points, and expectations. Instead of building what stakeholders think is clever, design research tells you what real users will pay for, use daily, and recommend to others.

At Lazarev.agency, an AI product design agency, we've seen the pattern repeat: startups skip research, launch fast, then wonder why nobody gets their product. Enterprises rebuild the same features three times because they never talked to users. Design research methodology stops that cycle.

Key takeaways

- Match methods to problems. Interviews explain why, usability tests show where, and analytics confirm what.

- Test with real users. Only your target market gives valid insights.

- Small samples, big insights. Five usability testers catch most issues; around 30 interviews reveal patterns.

- Document what matters. Share findings, quotes, and actions — not 60-page reports.

- Research drives results. Research-grounded design fuels conversions and retention, and it has helped clients of Lazarev.agency, a top AI design agency, raise over $500M.

- Make it continuous. Research, design, test, refine — repeat.

Why does design research matter?

Most products don't fail because of bad engineering. They fail because teams don't understand user behavior in context. They guess at user expectations, add features nobody asked for, then blame "market timing" when adoption tanks. The design research process fixes this. It helps you:

- See what users actually do. Not what they say in a survey, but how they behave in their natural environment when nobody's watching. Observational research catches the friction they've normalized.

- Separate real problems from noise. Users will tell you they want more features. Research shows they need simpler workflows. Qualitative data reveals what actually blocks them from specific tasks.

- Kill bad ideas early. Better to learn your concept doesn't work in week two than month six. Usability testing and user interviews save time and budget by catching issues when fixes cost hours.

- Build products that convert. Design solutions grounded in research findings perform better. Period. Understanding user needs is how you create products that people choose over competitors.

Good design research turns vague mandates like "make it intuitive" into actionable design outcomes. It gives you a comprehensive understanding of the problem before anyone touches Figma.

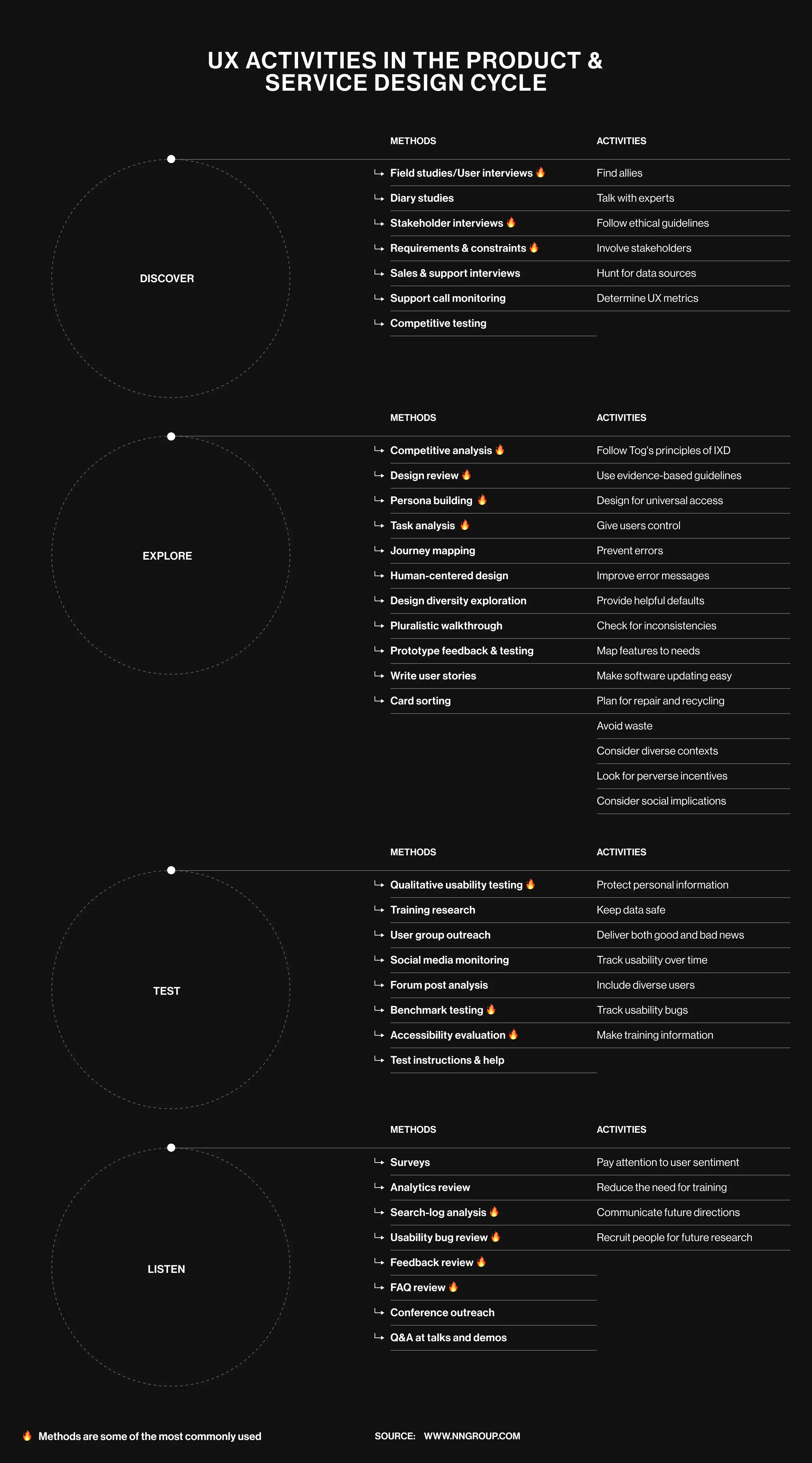

Design research methods

The research process isn't a template you copy-paste. Different aspects of your project need different methods.

Qualitative methods

- User interviews are structured conversations where you shut up and listen. You're not pitching; you're learning why users make decisions, where current solutions fail them, and what they'd pay to fix. This is where you uncover insights no analytics dashboard will ever show you.

- Contextual inquiry means watching users work in their actual environment. Instead of asking "How do you track expenses?" you sit with them while they do it and see where they pause, improvise, or give up. Real context reveals usage patterns surveys miss.

- Usability testing puts your design concepts in front of real users and asks them to complete tasks while you observe. Where do they hesitate? What makes them click back? What feels obvious? This method is non-negotiable before launch. We use it on every project at Lazarev.agency, your AI UX design partner.

- Focus groups bring together users from different segments of your target market to discuss their experiences with the problem space. They're useful for understanding diverse perspectives but less reliable for specific interaction design issues.

- Ethnographic studies involve spending extended time observing users, sometimes weeks or months. This longitudinal study approach uncovers behaviors users themselves don't consciously notice. It's intensive but reveals insights that rapid iteration can't.

- Card sorting helps you understand how users naturally categorize information. Hand them cards with features or content, ask them to group what belongs together. This shapes your information architecture and navigation before you build it wrong.
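Open card-sort results are commonly analyzed by counting how often participants place two cards in the same group: pairs that most people group together are candidates for the same navigation section. Below is a minimal sketch of that pairwise similarity calculation, assuming each participant's sort is recorded as a list of groups of card names; the card names and sorts are hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Return the share of participants who grouped each pair of cards together.

    `sorts` is a list of participant sorts; each sort is a list of groups,
    and each group is a list of card names.
    """
    pair_counts = Counter()
    for sort in sorts:
        for group in sort:
            # sorted() makes ("A", "B") and ("B", "A") count as the same pair
            for pair in combinations(sorted(set(group)), 2):
                pair_counts[pair] += 1
    n_participants = len(sorts)
    return {pair: count / n_participants for pair, count in pair_counts.items()}


# Hypothetical open card sorts from three participants
sorts = [
    [["Send", "Receive"], ["Portfolio", "Price chart"]],
    [["Send", "Receive", "Portfolio"], ["Price chart"]],
    [["Send", "Receive"], ["Portfolio", "Price chart"]],
]

# Strongest pairings first
for pair, share in sorted(co_occurrence(sorts).items(), key=lambda kv: -kv[1]):
    print(pair, f"{share:.0%}")
```

Here "Send" and "Receive" end up together for every participant, while "Portfolio" splits between groups, which is the kind of ambiguity worth probing in follow-up interviews or a tree test.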

Quantitative methods

- Surveys and questionnaires collect data from larger samples. Good for spotting trends across different groups and validating patterns you found in interviews. Bad for understanding the "why" behind the numbers.

- Analytics and behavioral data show you what users do in your product: where they drop off, which features they ignore, how long specific tasks take. This quantitative data confirms or contradicts what users tell you in research.

- A/B testing compares design variations to see which performs better with real users. It's how you move from "we think this works" to "this increased conversions by 23%."

- Tree testing validates your information architecture by asking users to find specific items in a text-only structure. If they can't navigate a simple tree, your actual interface won't save them.
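Before reporting a lift such as "this increased conversions by 23%", it's worth checking whether the difference could be noise. A minimal sketch using a two-sided two-proportion z-test with a pooled standard error; the function name and the visitor and conversion counts are hypothetical, chosen so that variant B shows a 23% relative lift.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare the conversion rates of variants A and B.

    Returns (relative_lift, z, two_sided_p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    lift = (p_b - p_a) / p_a
    return lift, z, p_value


# Hypothetical experiment: 5,000 visitors per variant
lift, z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=246, n_b=5000)
print(f"lift={lift:.0%}  z={z:.2f}  p={p:.3f}")
```

With these made-up counts the lift clears the conventional p < 0.05 bar, but the same 23% lift on a few hundred visitors per variant would not. Fixing the sample size before the test starts, rather than stopping when the numbers look good, keeps the p-value meaningful.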

Hybrid approach

- Design thinking combines several methods across stages (empathy research, ideation, prototyping, and testing) in rapid iteration cycles. It's a structured approach that keeps user needs central while moving fast.

- Diary studies ask users to log their experiences over time, giving you longitudinal insights without constant observation. You see how behavior shifts across different contexts and situations.

- Desirability studies test emotional response to design concepts using word association and preference rankings. This helps ensure your visual direction resonates with your target audience emotionally, not just functionally.

🔑 The key: Use appropriate methods for your specific research questions. Don't run focus groups when you need usability testing. Don't survey when you need contextual inquiry. Match the method to the problem.

How to conduct design research

Design research is the backbone of creating products that people not only try but also keep using. It helps teams gather feedback, identify patterns, and translate insights into real design solutions. To show how this works in practice, let’s walk through the Dollet Wallet case.

Dollet Wallet is a Web3 finance app that helps users securely store, track, and manage digital assets. In a crowded crypto market full of overly complex tools, Dollet aimed to balance accessibility for first-time users with advanced functionality for experienced traders.

However, the app faced a challenge: it struggled to communicate its value to different user groups. This led to friction during onboarding and lower retention rates. To solve this, Dollet partnered with Lazarev.agency, an AI product design agency, to run global UX research with 1,600 participants. The goal was to redesign Dollet into a wallet that drives adoption, engagement, and long-term loyalty.

1. Define what you're solving

Start with specific research questions, not vague goals. "Understand users" isn't a plan. "Identify why enterprise users abandon onboarding before connecting their first account" is.

We defined the Dollet Wallet target audience with precision. "Crypto users" is too broad. "First-time crypto buyers aged 25-40 who've never used a wallet" gives you someone to recruit and design for.

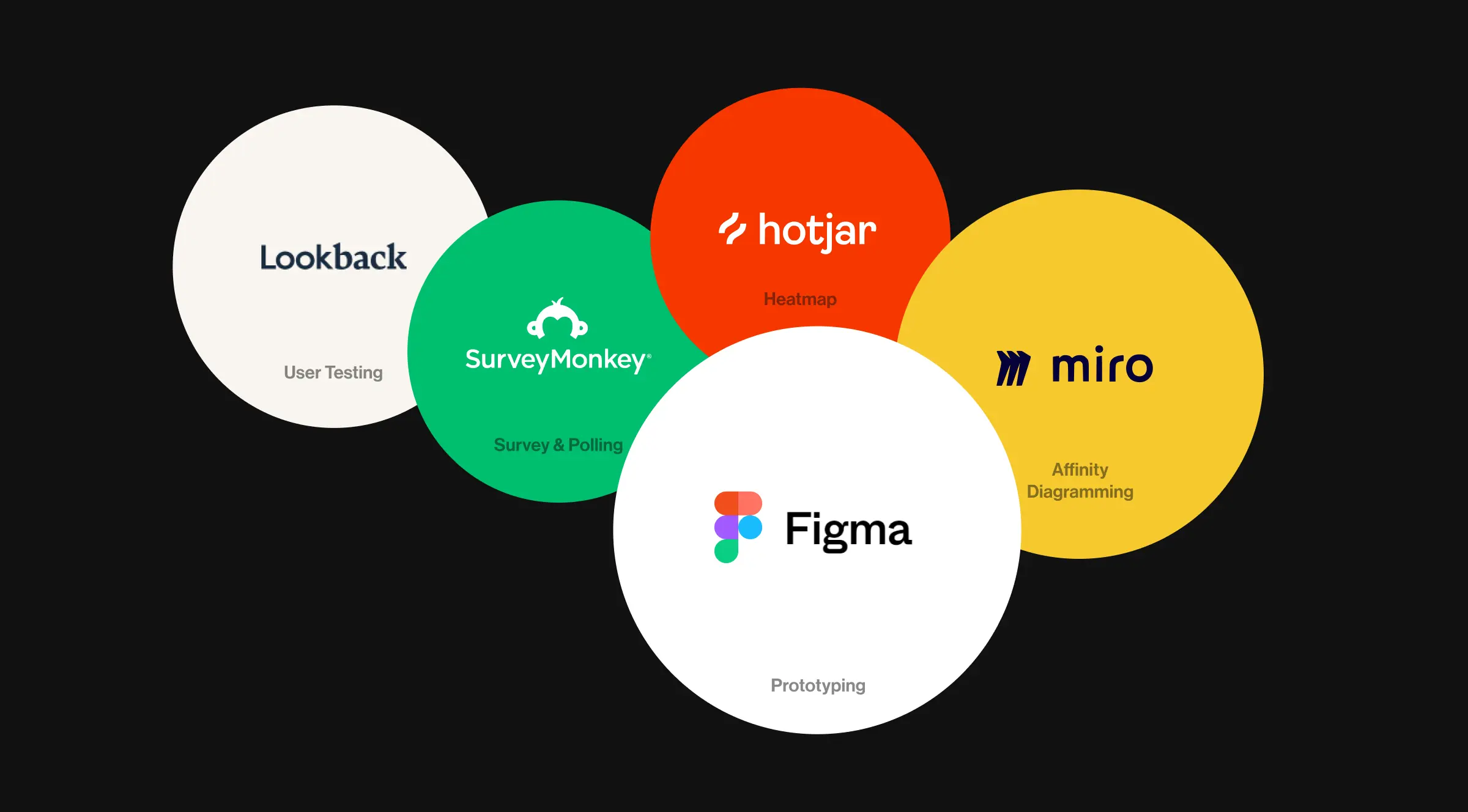

2. Choose your methods and tools

Select UX research methods based on what you need to learn:

- Unknown problem space? Start with user interviews and ethnographic studies.

- Validating a concept? Use usability testing and A/B testing.

- Understanding information structure? Try card sorting and tree testing.

- Measuring behavior change? Set up diary studies or longitudinal studies.

Most projects need a mix. We typically combine qualitative methods (interviews, contextual inquiry) with quantitative validation (surveys, analytics).
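Teams that plan research often can keep the question-to-method mapping above as a small lookup table, so a mixed project gets a consistent plan. A minimal sketch; the goal labels and the `plan_methods` helper are hypothetical, and the method lists are copied from the bullets above.

```python
# Hypothetical lookup table: research goal -> methods from the list above
METHODS_BY_GOAL = {
    "unknown problem space": ["user interviews", "ethnographic studies"],
    "validate concept": ["usability testing", "A/B testing"],
    "information structure": ["card sorting", "tree testing"],
    "behavior change": ["diary studies", "longitudinal studies"],
}


def plan_methods(goals):
    """Combine the methods for several goals, keeping order and dropping duplicates."""
    plan = []
    for goal in goals:
        for method in METHODS_BY_GOAL[goal]:
            if method not in plan:
                plan.append(method)
    return plan


print(plan_methods(["unknown problem space", "validate concept"]))
```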

3. Recruit real users

Test with potential users who match your target market, not your team's friends or random people on UserTesting. If you're building for CFOs, talk to CFOs. If you're designing for Gen Z, recruit Gen Z.

Sample size matters, but not in the way you think. Five test users typically catch around 85% of usability issues. Thirty interviews will show you clear patterns. You don't need hundreds unless you're doing market research at scale.
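The 85% figure comes from Nielsen and Landauer's problem-discovery model: the expected share of problems found by n users is 1 - (1 - L)^n, where L is the probability that a single user runs into a given problem. Nielsen reported an average L of about 0.31 across projects; your product's value may differ, so treat `p_detect` below as an assumption.

```python
def share_of_problems_found(n_users, p_detect=0.31):
    """Expected share of usability problems found by n_users testers.

    Nielsen-Landauer model: 1 - (1 - L)^n, where L (p_detect) is the chance
    that one tester hits a given problem. 0.31 is the reported average,
    not a constant of nature.
    """
    return 1 - (1 - p_detect) ** n_users


for n in (1, 3, 5, 10, 15):
    print(n, f"{share_of_problems_found(n):.0%}")
```

The curve flattens quickly after five users, which is why several small rounds of testing usually beat one large one. The model assumes a fairly homogeneous group, so distinct audiences are usually tested separately rather than pooled.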

For Dollet Wallet, we conducted global UX research with 1,600 participants across three distinct user groups:

- Crypto newcomers: Never owned digital assets, intimidated by terminology

- Beginners: Owned crypto but felt lost managing it

- Professionals: Managed diverse portfolios, needed speed and control

4. Run the research

- For interviews: Ask open-ended questions. Let silence sit; users fill it with insights. Dig into specific stories: "Tell me about the last time you tried to do X" beats "Do you like when apps do X?"

- For usability tests: Give users specific tasks and watch them struggle. Don't help. Don't explain. Their confusion is data. Record sessions so you can identify patterns later.

- For observational research: Watch what they do, not what they say. Users will claim they read instructions. Observation shows they skip straight to buttons and hope for the best.

5. Analyze and synthesize

Data collection is just the start. Now you need to draw conclusions:

- Group similar pain points and behaviors

- Identify patterns across different users

- Separate edge cases from common issues

- Map insights to design opportunities
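One way to make "separate edge cases from common issues" concrete is to count how many distinct participants hit each coded issue. A minimal sketch, assuming observations have already been coded as (participant, issue) pairs during synthesis; the issue codes, the data, and the 30% cut-off are hypothetical.

```python
from collections import defaultdict


def classify_issues(observations, n_participants, common_threshold=0.3):
    """Split coded issues into common issues and edge cases.

    observations: iterable of (participant_id, issue_code) pairs.
    An issue is "common" if at least `common_threshold` of participants hit it.
    """
    participants_by_issue = defaultdict(set)
    for participant, issue in observations:
        # A set, so a participant who hits the same issue twice counts once
        participants_by_issue[issue].add(participant)

    common, edge = {}, {}
    for issue, people in participants_by_issue.items():
        share = len(people) / n_participants
        if share >= common_threshold:
            common[issue] = share
        else:
            edge[issue] = share
    return common, edge


# Hypothetical coded notes from five interviews
observations = [
    ("p1", "gas fee confusion"), ("p2", "gas fee confusion"),
    ("p3", "gas fee confusion"), ("p1", "gas fee confusion"),
    ("p2", "portfolio spread across wallets"), ("p4", "portfolio spread across wallets"),
    ("p5", "wants dark mode"),
]

common, edge = classify_issues(observations, n_participants=5)
print("Common:", common)
print("Edge cases:", edge)
```

Counting participants rather than mentions keeps one vocal user from inflating an issue; the threshold is a judgment call to revisit as the sample grows.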

The research findings we got from Dollet Wallet users were very specific:

- Newcomers dropped off because unclear terminology made them fear they'd lose money. They didn't understand what "gas fees" meant or why some transactions took minutes while others took seconds.

- Beginners wanted to track portfolio performance but couldn't make sense of data spread across multiple wallets and networks. They needed a comprehensive understanding of their holdings without opening five different apps.

- Professionals were frustrated by slow access to advanced features. They knew what they wanted to do but couldn't find the right tools buried in menus designed for newcomers.

✅ What works: We use affinity mapping at Lazarev.agency: sticky notes or digital boards where we cluster observations until themes emerge.

6. Document and share

Create a practical guide your team will actually use. Skip the 60-page PDF and include:

- Key insights (3-5 main findings).

- User quotes that illustrate each point.

- Design implications (what this means for your work).

- Recommended next steps.

Share these insights with everyone involved: designers, developers, product managers, stakeholders. Research only works if it changes how the team builds.

The solution you get with proper design research

The research process gave us a comprehensive understanding of user needs before we touched design tools. With 1,600 participants, we could identify patterns with confidence.

Armed with those insights, we redesigned Dollet into a wallet that serves all three groups without compromising any of them:

- Built a clear onboarding flow that drives retention. Research showed unclear terminology and hidden core features caused drop-offs during the first week of use. We streamlined onboarding with plain language, visual balance tracking, and contextual tips that explain concepts when users encounter them.

- Turned assets into actionable insights. Instead of just listing coins and tokens, we transformed each asset page into a decision-making hub. Users now see market data, performance trends, related strategies, and curated news in one place.

- Improved transaction clarity for faster, safer transfers. Beginners told us they feared sending funds because crypto transactions felt irreversible and the jargon was intimidating. We simplified transfer flows using plain language, clear confirmation steps, and persistent transaction widgets that show real-time status.

- Crafted a portfolio management system for multi-account control. Dollet became the central hub for a user's entire crypto ecosystem. Instead of a tool for one wallet, it's now the command center for their complete digital asset strategy.

That's the difference between design that looks good in a portfolio and design that drives adoption, engagement, and loyalty in the market.

Ready to build a product users actually want? At Lazarev.agency, the best San Francisco design agency, we combine design research with execution, from UX research and MVP design to full product relaunches. Our clients have raised over $500M because we don't guess. We research, design, and deliver products that convert. Contact us to start your project with research that drives results.
