As generative AI becomes embedded in every layer of product design, from simple prompts to full-blown UI generation, trust is no longer a nice-to-have.

Your AI assistant can process billions of data points. Your design system might produce high-fidelity designs in a few seconds. But if your users don’t trust the interface, they’ll bounce, no matter how many AI tools you plug in.

We sat down with Anna Demianenko, Lead of AI Innovation at Lazarev.agency, to unpack what real trust in AI and user interface design looks like. Anna’s team has worked on AI-first platforms across finance, Web3, and healthcare, helping businesses move past the hype and into ethical, scalable AI experiences.

Key takeaways

- Trust in AI starts with the interface — users believe what they can see, control, and understand.

- Transparent UI components like confidence scores, data sources, and feedback loops are essential to building user confidence.

- Personalization must be paired with privacy, giving users control over their data and how it's used in the system.

- Empathy is a two-way street. Designers must consider both user expectations and the realistic limits of AI models.

- Overpromising AI features damages trust, so design teams must ground every AI-driven interaction in real user needs.

Designing trust into AI interfaces is more than a UX challenge

“You’re not just designing an app. You’re creating a relationship between humans and algorithms.”

{{Anna Demianenko}}

That relationship lives and dies in the user interface. If a system’s behavior is vague or unpredictable, users hesitate or disengage completely.

To avoid that, a trusted user interface (TUI) must:

- Clearly explain what the AI is doing

- Show how the output was generated, what data was used, and where the boundaries are

- Offer control and transparency at every key step

- Protect user data without sacrificing usability

Anna puts it simply:

“Good UX is predictable, explainable, and emotionally safe.”

{{Anna Demianenko}}

How AI can become a trust feature

Most founders ask: “Can AI improve my interface?”

But the better question is: “Can the interface make AI feel trustworthy?”

According to Anna:

- AI can increase trust by detecting risk patterns, like fraudulent logins or user anomalies

- It can enhance UX by predictively suggesting actions based on history or context

- But only when it’s integrated into user interfaces that respect boundaries

“If your AI interface is smart but unpredictable, users will avoid it. If it’s predictable but dumb, they’ll ignore it. You need both.”

{{Anna Demianenko}}

This is where trusted UI components come in, such as model confidence indicators, transparent personalization, and explainable feedback loops.

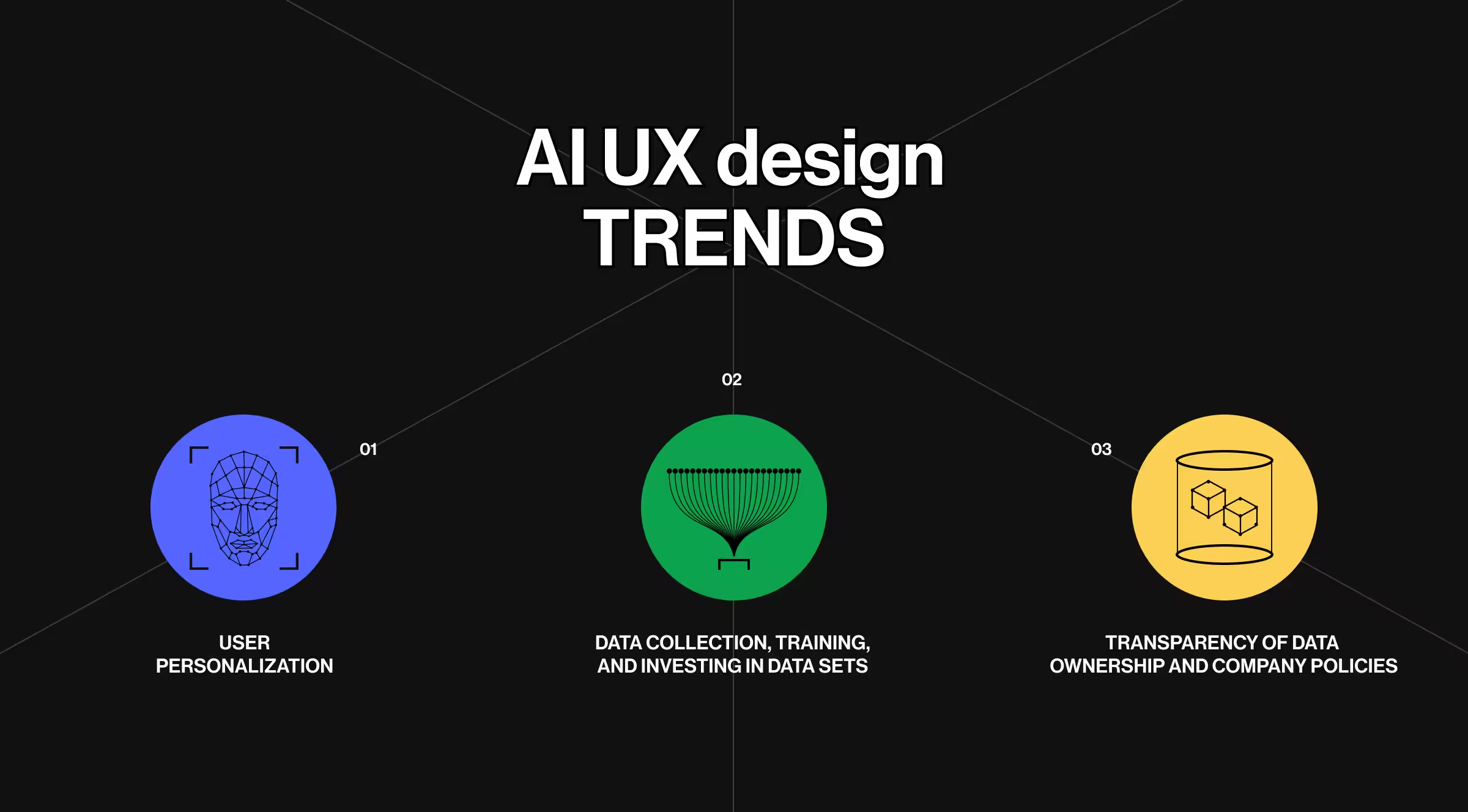

The most important AI UX trends you’re probably ignoring

The real evolution of AI product design and user interface design isn’t happening in prompt libraries or datasets. It’s happening in user expectations.

Here’s what Anna sees shaping modern UX:

1. Hyper-personalization that doesn’t cross the line

“We’ve reached the point where AI knows what users want before they do. But that’s only helpful if the interface explains how it got there.”

{{Anna Demianenko}}

Designers must build personalization logic that’s:

- Explainable (“We recommended this because of your past behavior.”)

- Controllable (users can opt out, reset preferences, or view history)

- Consent-driven (data isn’t just collected — it’s offered)
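
One way to picture these three properties together is a recommendation record that carries its own explanation and is filtered by what the user has agreed to share. The sketch below is illustrative only: the `Recommendation` and `PersonalizationPrefs` shapes, the signal names, and `visibleRecommendations` are assumptions made for this example, not part of any product described in the interview.

```typescript
// Hypothetical data shapes; every name here is an assumption for illustration.
interface Recommendation {
  itemId: string;
  reason: string;        // explainable: text shown next to the suggestion
  signalsUsed: string[]; // which categories of user data produced it
}

interface PersonalizationPrefs {
  optedIn: boolean;            // controllable: the user can opt out entirely
  allowedSignals: Set<string>; // consent-driven: only data the user offered
}

// Return only the recommendations the user has consented to receive.
function visibleRecommendations(
  recs: Recommendation[],
  prefs: PersonalizationPrefs
): Recommendation[] {
  if (!prefs.optedIn) return [];
  return recs.filter((rec) =>
    rec.signalsUsed.every((signal) => prefs.allowedSignals.has(signal))
  );
}
```

Because each surviving record still holds its `reason`, the interface can render "We recommended this because of your past behavior" without a second lookup.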

2. Investment in clean, ethical data

“Your interface is just the final layer. The quality of the AI experience starts with the dataset.”

{{Anna Demianenko}}

Anna points out that AI UX often fails because the system’s decisions are built on biased or outdated data. Even if the UI is intuitive, AI models that don’t reflect user realities destroy trust fast.

That’s why great UX design must also include:

- Data vetting processes

- Inclusive examples for training

- Interface messaging that admits limitations (“This model is trained on data from 2023 only.”)

3. Transparency-first design

With Gen Z entering the workforce and demanding accountability, interfaces need to move away from vague automation and into visible AI reasoning.

Anna explains:

- “Designers need to show how confident the system is, what its knowledge base is, and what it can’t do.”

- “If the AI pulled content from five sources, give the user links.”

- “If the model is unsure, tell the user.”
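
As a rough sketch of how those three points could map onto a response payload, the example below turns a numeric score into a plain-language confidence label, lists the sources, and adds a warning when the model is unsure. The `AiAnswer` shape, the 0.8 and 0.5 thresholds, and the message wording are all assumptions chosen for illustration; real thresholds would need calibration against the actual model.

```typescript
// Hypothetical response shape; field names and thresholds are assumptions.
interface Source {
  title: string;
  url: string;
}

interface AiAnswer {
  text: string;
  confidence: number; // assumed to be a score between 0 and 1
  sources: Source[];
}

type ConfidenceLevel = "high" | "medium" | "low";

// Map a raw score to a label users can read at a glance.
function confidenceLevel(score: number, highAt = 0.8, lowBelow = 0.5): ConfidenceLevel {
  if (score >= highAt) return "high";
  if (score >= lowBelow) return "medium";
  return "low";
}

// Build the text a UI could display alongside the answer.
function renderAnswer(answer: AiAnswer): string {
  const level = confidenceLevel(answer.confidence);
  const lines: string[] = [answer.text, `Confidence: ${level}`];
  if (level === "low") {
    lines.push("The model is unsure about this answer. Please verify it.");
  }
  if (answer.sources.length === 0) {
    lines.push("Sources: none available");
  } else {
    lines.push("Sources:");
    answer.sources.forEach((source, index) => {
      lines.push(`${index + 1}. ${source.title} (${source.url})`);
    });
  }
  return lines.join("\n");
}
```

Stating "Sources: none available" explicitly, rather than hiding the section, keeps the interface honest about what the answer rests on.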

Empathy goes both ways: users and machines

Anna proposes a powerful mental model:

“If your intern couldn’t complete the task, your AI probably can’t either.”

{{Anna Demianenko}}

This insight leads to better AI implementation: systems that fail gracefully, communicate clearly, and don’t overpromise. It helps avoid edge-case disasters that erode trust.

But the flip side is just as important: AI must also learn empathy for the user.

That happens through:

- Tracking interactions (e.g., misclicks, form re-submissions)

- Analyzing behavior (e.g., users repeating prompts)

- Responding in real time (e.g., “Sorry, we didn’t get that right. Let’s fix it.”)
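
A minimal sketch of one such loop is shown below: it treats a user repeating essentially the same prompt as a sign of confusion and returns a recovery message. The function names, the repeat threshold of 2, and the ASCII-only normalization are assumptions for this example; the interview does not describe how Anna's team detects confusion in practice.

```typescript
// Reduce a prompt to a comparable form. ASCII-only on purpose to keep the
// sketch short; a production version would need proper Unicode handling.
function normalize(prompt: string): string {
  return prompt
    .toLowerCase()
    .replace(/[^a-z0-9\s]/g, "")
    .replace(/\s+/g, " ")
    .trim();
}

// True when the latest prompt appears at least `repeatThreshold` times
// (counting itself) in the recent history.
function looksConfused(recentPrompts: string[], repeatThreshold = 2): boolean {
  const latest = recentPrompts[recentPrompts.length - 1];
  if (latest === undefined) return false;
  const target = normalize(latest);
  const repeats = recentPrompts.filter((p) => normalize(p) === target).length;
  return repeats >= repeatThreshold;
}

// Return a recovery message when confusion is suspected, otherwise null.
function recoveryMessage(recentPrompts: string[]): string | null {
  return looksConfused(recentPrompts)
    ? "Sorry, we didn't get that right. Let's fix it."
    : null;
}
```

The same pattern could be fed by other signals from the list, such as misclicks or form re-submissions, as long as each one is reduced to a countable event.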

Anna’s team has implemented these loops in chatbots, AI-driven UIs, and personalized dashboards, where AI detects when it caused confusion and adapts the interface dynamically.

The hidden threat: AI products that overpromise and underdeliver

Right now, the AI space is crowded with tools that have no product-market fit.

“Investors love it. Users don’t,” Anna says. “We’re seeing ‘AI’ slapped onto everything, but most of these products don’t solve actual user problems.”

This over-saturation leads to market fatigue. Even well-designed systems risk being dismissed because users have been burned before.

The solution: design teams must get honest.

- Do the research before shipping AI

- Test assumptions with real user behavior

- Prioritize function over novelty

How to design transparent AI interfaces (a tactical framework)

Transparency is still a new UX pattern.

Anna offers a checklist that every designer should use before implementing AI-powered features in a product:

✅ Define what the AI can and can’t do

✅ Decide whether to show confidence scores and how to explain them

✅ Surface the sources behind the output (articles, datasets, timestamps)

✅ Give users visual cues during onboarding and task flow

✅ Gather feedback and close the loop visibly (“Thanks. We’ve updated this feature.”)

“Transparency isn’t just telling the user something. It’s showing how the system learns, makes decisions, and respects them.”

{{Anna Demianenko}}

🔍 If you're exploring how to make your AI feel more intuitive and human, check out our blog on designing human-like interfaces. It dives deeper into what real connection looks like in AI UX.

Ethics, feedback, and control are the new foundational principles of AI UX

Ethics begins with the dataset

“Designers may not touch the data, but they must ask where it came from, who it excludes, and how it aligns with company values.”

{{Anna Demianenko}}

Feedback isn’t optional

You’re not done when you collect feedback. You’re done when you show the user what you did with it.

Examples Anna gave include:

- Sentiment-aware interactions (“Looks like that wasn’t what you expected.”)

- Behavior-based triggers (“You clicked three times. Let’s help.”)

- Regeneration flows based on error signals (“We noticed confusion. Here’s a better answer.”)

Personalization with privacy

Designers can’t build encryption, but they can build control:

- Permission toggles

- Data visibility (“Here’s what we stored. Delete it anytime”)

- Consent reminders during major interactions
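
To make the first two controls concrete, here is a small in-memory sketch of a privacy center: data is recorded only for categories the user has switched on, everything stored can be listed, and it can be deleted at any time. The `PrivacyCenter` class, its method names, and the category list are hypothetical, and the choice to erase a category's data when its toggle is turned off is a design assumption rather than something stated in the interview.

```typescript
// Hypothetical categories; a real product would define its own.
type DataCategory = "search_history" | "location" | "purchase_history";

class PrivacyCenter {
  private permissions = new Map<DataCategory, boolean>();
  private stored = new Map<DataCategory, string[]>();

  // Permission toggle. Assumption: switching a category off also erases it.
  setPermission(category: DataCategory, allowed: boolean): void {
    this.permissions.set(category, allowed);
    if (!allowed) this.stored.delete(category);
  }

  // Store a value only if the user has allowed this category.
  record(category: DataCategory, value: string): boolean {
    if (this.permissions.get(category) !== true) return false;
    const items = this.stored.get(category) ?? [];
    items.push(value);
    this.stored.set(category, items);
    return true;
  }

  // Data visibility: a copy of everything currently held.
  whatWeStored(): Record<string, string[]> {
    const snapshot: Record<string, string[]> = {};
    this.stored.forEach((values, category) => {
      snapshot[category] = values.slice();
    });
    return snapshot;
  }

  // "Delete it anytime."
  deleteAll(): void {
    this.stored.clear();
  }
}
```

Permissions default to off here, so nothing is collected until the user actively opts in.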

Don’t apply old UX rules to a new AI system

One of the biggest mistakes we see? Teams try to drop AI into traditional UX frameworks without rethinking the fundamentals.

But AI doesn’t follow fixed flows. It learns, adapts, and evolves. That means your interface isn’t just a layout anymore; it’s a system in motion. The way users interact with it changes based on behavior, history, and context. So if you’re still mapping out static screens and expecting AI to “just fit,” you’re setting the product up to fail.


If you’re building with AI, design like it. Treat the interface as an evolving dialogue. Get that right, and the product becomes easier to use, easier to trust, and far more scalable.

Final thought: the most trusted AI interfaces will be the most human

Trust doesn’t come from branding. It comes from clarity, empathy, and behavior.

“If your system explains what it’s doing, listens when it gets it wrong, and improves over time, users will trust it. Even if it’s not perfect.”

{{Anna Demianenko}}

As generative AI continues transforming how we design, generate, and scale user interfaces, the only way forward is human-centered, ethical, and interface-based.

That’s the future of AI and user interface design. And it’s already here.

Want to build an AI product users don’t just use but believe in? Let’s design that trust together.
