When a product team finally sees exactly where users stumble, priorities snap into focus. That clarity starts with choosing the right tools for UX research.

In this guide we group the most popular platforms into 12 purpose-driven categories, show when each shines, and reveal how Lazarev.agency applies them in practice.

Expect a concrete playbook you can copy, backed by two fresh client stories from Mannequin and HiTA.

Key takeaways

- Map platforms to research stage × team constraints before price.

- Blend quantitative and qualitative data for truly actionable insights.

- Mannequin’s AI-fashion site raised engagement after targeted prototype testing; HiTA’s EdTech platform improved its metrics after a lean mixed-methods research process.

Best user research tools by category

To cut through the noise, we’ve mapped 12 tool categories to each research phase. If you’d rather get a tailored stack of UX research software, our UX research services team can build one for you.

🔍 Pro tip: For remote usability testing on early concepts, run a Maze study with fewer than five tasks first. If the metrics look promising, schedule user interviews in Lookback to dig into the reasoning. This mixed-methods approach, paired with the right UX analytics tools, surfaces both how big the issues are and why they happen without bloating timelines.

What about AI-powered tools in UX research?

According to Nielsen Norman Group’s 2024 report “Accelerating Research with AI”, current AI user research apps and add-ons help with planning and transcript summarization, yet they still can’t watch usability tests, process video, or factor researcher context. This leads to vague, sometimes biased recommendations.

From insight-generator chatbots that mis-cite their sources to “AI collaborators” that ignore study goals, today’s AI-powered UX research software struggles with visual cues, mixed-method research data, and reliable attribution. Until those gaps close, treat AI as an accelerant and rely on human synthesis for nuanced, actionable insights.

🔎 For a deeper look at how AI intersects with UX, see our piece: How AI influences design and the reciprocal impact of UX on AI-driven products.

Decision-making guide — choose by team size & budget

“Tool choice is really a function of two levers — headcount and runway. Align those first, and insights start paying for themselves.”

{{Kirill Lazarev}}

“Most teams over-buy software and under-buy clarity. Right-size the toolkit to your bandwidth, then iterate. A solo designer with the right tools can out-learn an enterprise drowning in licences.”

{{Anna Demianenko}}

🔍 Pro tip: When you own your participants (e.g., enterprise customer councils), plug that list into User Interviews’ “private projects” for custom pricing and higher-quality feedback without third-party NDAs.

UX research resources bundles

Each bundle balances quantitative user behavior tracking with narrative qualitative research, so these UX research resources can deliver actionable insights within days.

Comparison table — feature vs. use case

No single platform does everything well. Use this quick comparison to plug the gaps in your current stack without bloating it. Refer to the comparison table when your use case forces a trade-off between scale and speed.

Tool showdown: comparing the top UX research tools

Not all UX research tools are created equal, and choosing the right one depends on your team size, product stage, and research method. Below, we compare five head-to-head pairs of the most searched tools for UX research, so you can find the best fit fast if you are still unsure what you need.

Maze vs. Useberry

Best for: Prototype testing and unmoderated usability studies

📌 Verdict: Maze offers broader testing formats and real-time metrics, while Useberry excels at visual feedback for early-stage prototypes.

Dovetail vs. Condens

Best for: Organizing, tagging, and analyzing qualitative UX research data

📌 Verdict: Dovetail is ideal for scaling research ops, while Condens suits teams who want AI-assisted segmentation with flexibility.

Hotjar vs. FullStory

Best for: UX analytics and user behavior tracking at scale

📌 Verdict: Hotjar wins for simplicity and fast setup, while FullStory is the heavyweight for detailed UX behavior mapping.

Lookback vs. UserTesting

Best for: Moderated UX testing with real users

📌 Verdict: Lookback is great for agile in-house research, while UserTesting suits larger orgs with budget and scale in mind.

Typeform vs. Google Forms

Best for: User surveys and feedback collection

📌 Verdict: Typeform boosts engagement for external feedback, while Google Forms is a no-cost tool for quick validation.

How to use these UX research tool comparisons

- Choose based on your stage: early MVP vs. growth analytics

- Filter by team type: solo, startup, agency, or enterprise

- Balance cost vs. insight: don’t overbuy features you won’t use

❓Want help selecting your stack? Talk to our UX research experts. We’ll audit your current tools and recommend better fits for you.

Real-world research stacks from Lazarev.agency

Below you’ll find two compact snapshots: Mannequin and HiTA. They show the same tool-selection logic working in very different contexts: an AI-fashion startup selling visuals online and an EdTech platform refining a learning assistant. Each story walks through the problem, the research stack we chose, and the results, proving that the 12-category framework scales from lean MVP tests to enterprise-grade road-mapping.

Case study #1 — Mannequin: AI fashion studio

Mannequin replaces traditional apparel photoshoots with AI-generated models for DTC brands. They arrived with breakthrough tech but no digital platform to prove value. Their core challenge: communicate a complex hybrid workflow (real clothing + AI) in a sales-ready story.

Our approach:

- Discovery: short online surveys to segment B2B buyers.

- Prototype testing: five Useberry click-path tests uncovered drop-off between hero demo and pricing; we rewired the narrative flow.

- Analytics & iteration: post-launch Google Analytics 4 confirmed visitors now scrolled deeper and hit the order CTA more often.

- Documentation: Grain highlight reels helped align copy, 3D visuals, and dev sprints.
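To make the drop-off step concrete, here is a minimal sketch of how a click-path export could be turned into per-step drop-off rates. It is an illustration, not the analysis we ran for Mannequin: the step names, the counts, and the helper functions `step_drop_off` and `worst_transition` are all hypothetical, and a real Useberry or GA4 export would need its own parsing first.

```python
def step_drop_off(step_counts):
    """Return (from_step, to_step, drop_off_rate) for each adjacent pair of steps.

    step_counts is an ordered list of (step_name, participants_reaching_step).
    """
    results = []
    for (name_a, count_a), (name_b, count_b) in zip(step_counts, step_counts[1:]):
        # Guard against an empty step so the sketch never divides by zero.
        rate = 0.0 if count_a == 0 else 1 - count_b / count_a
        results.append((name_a, name_b, rate))
    return results


def worst_transition(step_counts):
    """Return the adjacent step pair with the highest drop-off rate."""
    return max(step_drop_off(step_counts), key=lambda item: item[2])


# Hypothetical counts, invented purely for illustration.
funnel = [("hero_demo", 200), ("pricing", 80), ("order_cta", 60)]

for from_step, to_step, rate in step_drop_off(funnel):
    print(f"{from_step} -> {to_step}: {rate:.0%} drop-off")

from_step, to_step, _ = worst_transition(funnel)
print(f"Investigate first: {from_step} -> {to_step}")
```

Ranking transitions by rate rather than by raw participant loss keeps late, low-traffic steps comparable with early ones, which is usually what you want when deciding where to rework the narrative.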

Outcome: A story-driven 3D-style landing (built entirely from 2D assets) that drives sales and positions Mannequin as an AI-fashion pioneer.

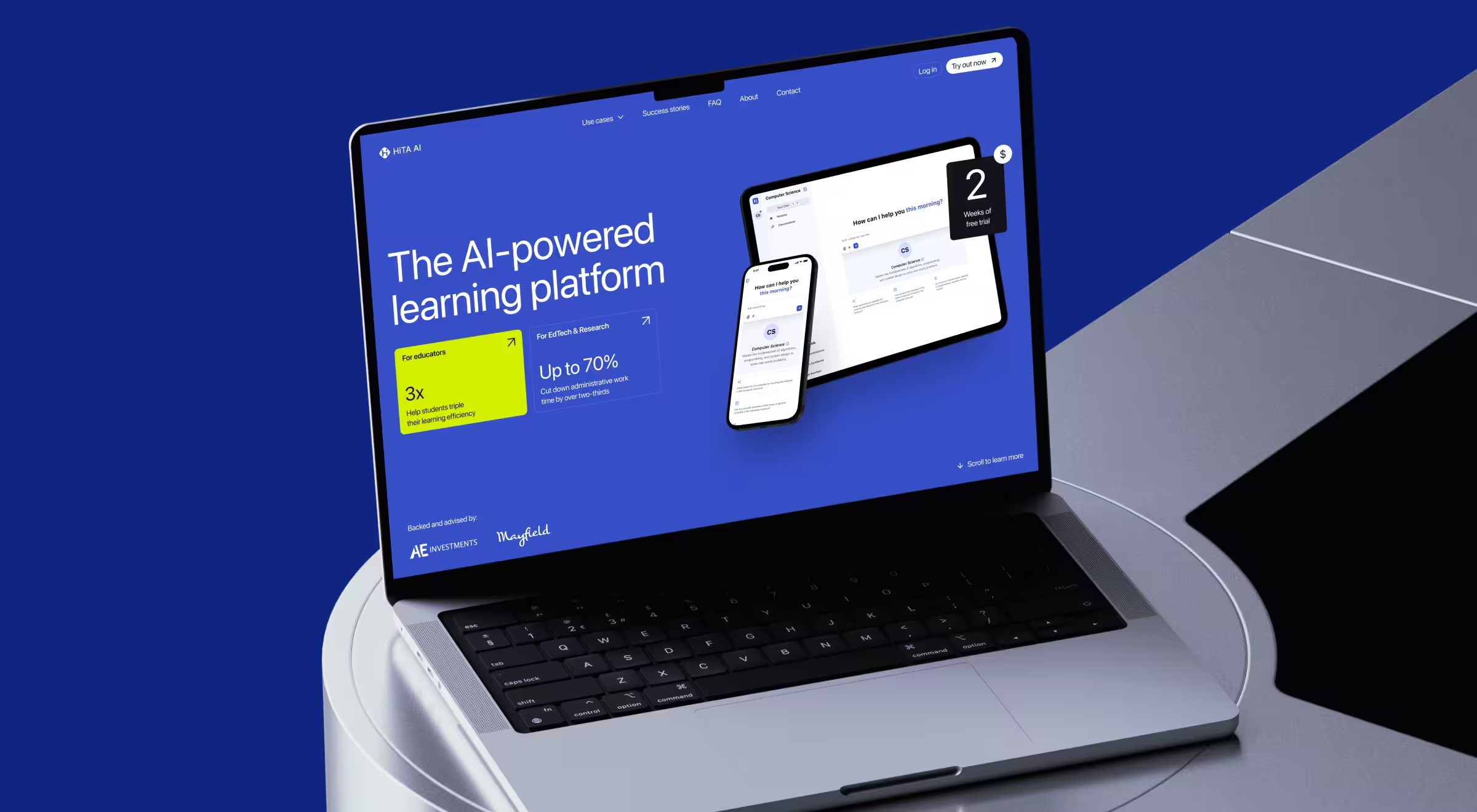

Case study #2 — HiTA AI: EdTech assistant scaling to 2000+ users

HiTA is an AI-powered learning platform whose chatbot offers hints, not answers. They wanted to boost sales and craft a long-term roadmap.

Our approach:

- Wizard-of-Oz prototype testing — researchers manually responded as the chatbot while HiTA Analytics clustered intents.

- User interviews with educators revealed pain around admin workload.

- Mixed methods research combined quantitative data (chat logs, funnel metrics) and qualitative feedback to prioritise features.

- Promo landing redesign distilled benefits: “3× faster learning” + “70% admin cut”.
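One simple way to combine the two evidence types when prioritising features is a weighted score. The sketch below is an assumption-laden illustration rather than the method used on HiTA: the `FeatureEvidence` fields, the `prioritise` function, the 50/50 default weighting, and every number in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FeatureEvidence:
    name: str
    affected_sessions: int   # quantitative: sessions in logs or funnels showing the problem
    interview_mentions: int  # qualitative: interviews in which the pain point came up


def prioritise(features, total_sessions, total_interviews, quant_weight=0.5):
    """Sort features by a blended score of quantitative reach and qualitative frequency."""

    def score(feature):
        quant = feature.affected_sessions / total_sessions if total_sessions else 0.0
        qual = feature.interview_mentions / total_interviews if total_interviews else 0.0
        return quant_weight * quant + (1 - quant_weight) * qual

    return sorted(features, key=score, reverse=True)


# Hypothetical evidence, invented purely for illustration.
backlog = [
    FeatureEvidence("hint_quality", affected_sessions=400, interview_mentions=2),
    FeatureEvidence("admin_dashboard", affected_sessions=120, interview_mentions=7),
    FeatureEvidence("onboarding", affected_sessions=50, interview_mentions=1),
]

for feature in prioritise(backlog, total_sessions=1000, total_interviews=10):
    print(feature.name)
```

Shifting `quant_weight` toward 1.0 favours what the logs show, and toward 0.0 favours what interviewees say. Treat the ranking as a starting point for discussion, not a replacement for human synthesis.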

Outcome: A new website and research strategy that drives sign-ups and guides product decisions.

🔍 Pro tip: For startups in education or healthcare, pair Wizard-of-Oz testing with prototype testing in Maze to validate conversational AI before building costly ML pipelines.

The 12-category framework, the decision grid, and the bundled stacks all point to one lesson: tool choice matters only when it accelerates insight.

The sequence stays the same: recruit the right participants, mix quantitative and qualitative data, loop findings back into design, and document everything for future sprints. Mannequin and HiTA prove that disciplined tooling shortens feedback cycles, sharpens product strategy, and turns research into revenue rather than overhead.

Ready to match your research stack to your roadmap?

Contact our UX research team about selecting, integrating, and validating the best user research tools for your next release.

We’ll audit your current workflow, recommend a right-sized stack, and guide your first study from kickoff to insight hand-off. We’ll handle participant recruitment, platform setup, and mixed-method analysis so your team can focus on building features that land.

Let’s match your research stack to your roadmap and launch the next release with data-backed confidence!
