There’s been an explosion of AI-powered tools in recent years, from writing assistants to search companions and workflow bots. Many look promising and work well on the surface. But take a closer look, and you’ll find a recurring pattern: old products with a ChatGPT window slapped on top.

This isn't an AI transformation. It’s surface-level design and a missed opportunity.

In this article, we sift through examples and insights to understand what really makes AI valuable in product design. Along the way, we noticed a deeper and increasingly frustrating issue: “AI” or “language models” (terms that deserve their own article) are not about adding a chatbot to your interface. They’re about designing workflows where AI is the product, not a surface-level feature meant to impress.

If you're serious about building with AI, the question to ask is: What is now possible that wasn’t before? From there, rethink the experience entirely.

Let’s explore what separates surface-level integrations from truly AI-first design.

Key takeaways

- AI as a widget can’t compete with a product designed around AI. The true value comes when AI transforms the entire product experience, not just when it’s layered on top with a chatbot or assistant.

- Expose and personalize system prompts. Let users define tone, behavior, and task priorities for AI agents.

- Focus on tools. Empower users to create, refine, and adapt AI agents to their needs.

- Avoid the “horseless carriage” trap. Don’t retrofit old UX patterns to accommodate AI; reimagine the experience from the ground up to fit new possibilities.

Why outdated UX limits AI’s potential

Take Gmail. It’s a great case study of how even tech giants are cautiously integrating AI into existing products, sometimes to a fault.

Gmail’s integration of Gemini allows users to draft emails using a ✨ “Help me write” button. It’s handy until it’s not. Why?

Gemini generates content based on a system prompt hidden from the user. You can’t see it. You can’t edit it. If the tone feels off (too formal, vague, or clichéd), you’re stuck, with no easy way to fix it. In fact, writing the email manually might be faster than wrestling with the assistant or crafting the perfect prompt.

This is what happens when AI is added after the interface is built. Users have no control, feedback loops break down, and AI becomes just another button, one that’s occasionally useful, but far from transformational.

💡Insight: Legacy UX tends to be rigid and linear. AI works best when the interface is flexible, iterative, and adaptive. When the goal is a real AI experience, surface-level additions like a star-shaped button fall short. What matters is reworking the system.

Reveal and customize the system prompt

The system prompt is the invisible manual that guides your AI’s behavior. In many cases, it matters more than the model itself.

If that sounds familiar, it’s because it echoes the fundamentals of UX: when something requires a detailed manual, it’s often a sign of poor design. Good design, as Pete Koomen (co-founder of Optimizely) puts it, should feel invisible.

Here’s why system prompts should be open and editable:

- Personalized tone: Let users choose whether they want responses to be friendly, formal, witty, or brief.

- Domain relevance: A lawyer and a content marketer need different defaults, which is why tools like OpenAI’s GPTs let users tailor prompts to specific use cases.

- Building trust: Making the AI’s “thinking” visible demystifies the process and helps users see it as a co-pilot rather than a black box.

💡Insight: Trust in AI is limited: 54% of people say they are cautious about it, especially in advanced economies. Yet acceptance remains relatively high. The same research suggests that trust increases when AI is supported by strong regulation, transparent governance, and organizational assurance mechanisms that demonstrate responsible use.

And no, this doesn’t mean exposing raw JSON. You can design a simple interface where users adjust tone, choose personas, or give feedback on agent behavior. Train it once, and it adapts. That’s the real value.
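One way to picture this is a handful of plain controls compiled into the prompt behind the scenes. The Python sketch below assumes a hypothetical `AssistantSettings` object and `build_system_prompt` function; the preset wording is illustrative, not taken from any real product.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical presets a settings screen might expose as simple choices.
TONE_PRESETS = {
    "friendly": "Write in a warm, conversational tone.",
    "formal": "Write in a polite, formal business tone.",
    "witty": "Write with light, tasteful humor.",
    "brief": "Keep replies under five sentences.",
}


@dataclass
class AssistantSettings:
    tone: str = "friendly"
    persona: str = "a helpful email assistant"
    # Notes the user leaves after rating a draft, e.g. "Never use exclamation marks".
    feedback_notes: list[str] = field(default_factory=list)


def build_system_prompt(settings: AssistantSettings) -> str:
    """Turn UI-level settings into the system prompt the model actually receives."""
    if settings.tone not in TONE_PRESETS:
        raise ValueError(f"unknown tone: {settings.tone}")
    lines = [f"You are {settings.persona}.", TONE_PRESETS[settings.tone]]
    # Feedback persists: the user states a preference once, and it applies every time.
    lines += [f"User preference: {note}" for note in settings.feedback_notes]
    return "\n".join(lines)
```

Because the prompt is rebuilt from settings on every request, a user who adds one feedback note never has to repeat it.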

What trust looks like in AI and how Lazarev.agency designs for it

At Lazarev.agency, we recently worked on an AI-powered platform in the legal domain, a field where trust and factual accuracy directly impact people’s rights. The product needed to deliver legal and civil rights guidance using AI without risking hallucinated answers, outdated statutes, or misapplied interpretations. To solve this, we designed a multi-layered trust framework:

- All AI responses are backed by a curated, isolated database

- Each result includes linked sources

- Before delivery, answers pass through a moderation workflow guided by legal experts
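In code, these three layers behave like a gate between the model and the user. This is a minimal Python sketch under our own assumptions, not the production system: `Passage`, `draft_answer`, `review`, and the keyword-overlap retrieval are hypothetical stand-ins, and the model call is replaced by joining the retrieved passages.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Passage:
    text: str
    source_url: str  # every stored passage carries its citation


@dataclass
class Answer:
    question: str
    text: str
    sources: list[str]
    status: Status = Status.PENDING_REVIEW


def _terms(text: str) -> set[str]:
    return {w.lower().strip("?.,") for w in text.split() if len(w) > 3}


def retrieve(question: str, curated_db: list[Passage]) -> list[Passage]:
    """Naive keyword overlap against the curated store only; no open-web search."""
    q = _terms(question)
    return [p for p in curated_db if q & _terms(p.text)]


def draft_answer(question: str, curated_db: list[Passage]) -> Answer | None:
    passages = retrieve(question, curated_db)
    if not passages:
        return None  # decline instead of letting a model improvise
    # Stand-in for a model call restricted to the retrieved passages.
    text = " ".join(p.text for p in passages)
    return Answer(question, text, [p.source_url for p in passages])


def review(answer: Answer, approved: bool) -> Answer:
    """Expert moderation step: answers stay PENDING_REVIEW until someone decides."""
    answer.status = Status.APPROVED if approved else Status.REJECTED
    return answer


def deliverable(answer: Answer | None) -> bool:
    return answer is not None and answer.status is Status.APPROVED and bool(answer.sources)
```

Returning `None` when nothing in the curated store matches is the key design choice: the system declines rather than guesses.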

We also exposed the system prompt logic so domain specialists could control the tone, scope, and reference model, which helped the product build confidence with both institutions and everyday users. In domains where errors carry legal or reputational consequences, trust is the foundation.

Another example of thoughtful, high-impact AI design is our work with Accern Rhea, a platform that helps financial analysts monitor and assess risk using natural language processing. We partnered with the Accern team to rethink how users interact with real-time data, integrating contextual AI to simplify everything from search to reporting.

Instead of presenting generic answers, the product was designed to surface insights from verified news sources and industry databases, tailored to user roles and sectors.

As a result, we’ve delivered a powerful, AI-first experience that increased analyst efficiency while preserving the rigor and accuracy required in high-stakes financial environments.

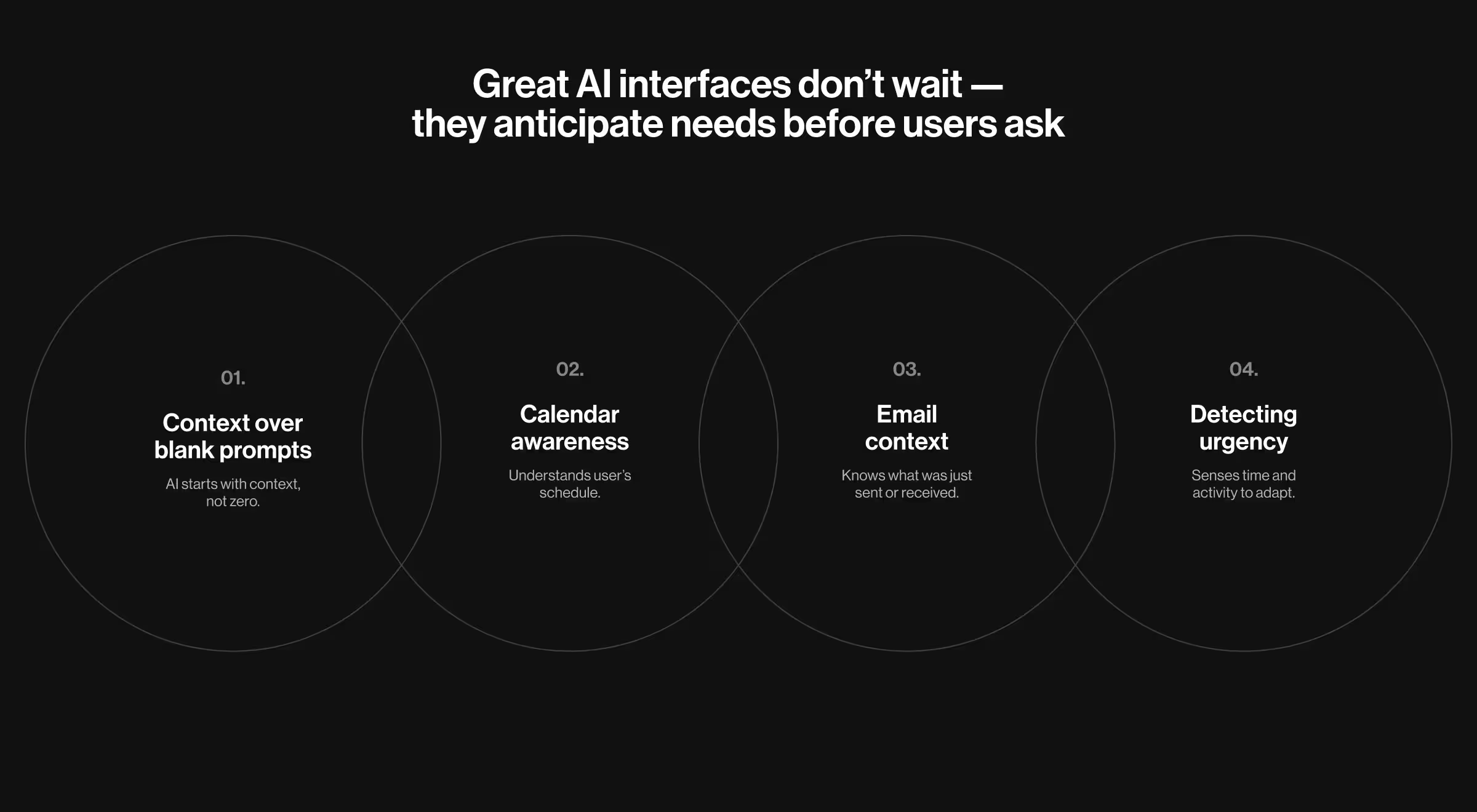

Design for anticipation

Great AI interfaces don’t wait for commands. They anticipate needs before users ask.

Rather than starting from a blank prompt, AI-first products draw on context:

- What’s on the user’s calendar?

- What emails were just sent?

- Is the user in a rush, based on time of day or app activity?

Imagine an email assistant saying: “Looks like you're following up after a call with Jane from Stripe. Want me to draft a thank-you with the key takeaways?”

That’s not sci-fi. That’s what happens when AI connects to calendars, emails, and user context. It’s already here, and products that embrace this now will win.
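A simplified version of the Jane-from-Stripe check can be written as a rule over two data sources. This Python sketch assumes hypothetical `Meeting` and `SentEmail` records; in a real product they would come from calendar and mail integrations the user has authorized, and the 24-hour window is an arbitrary default.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Meeting:
    title: str
    attendee: str  # attendee email address
    ended_at: datetime


@dataclass
class SentEmail:
    to: str
    sent_at: datetime


def follow_up_suggestions(
    meetings: list[Meeting],
    sent: list[SentEmail],
    now: datetime,
    window: timedelta = timedelta(hours=24),
) -> list[str]:
    """Offer a follow-up draft for recent meetings the user hasn't emailed about yet."""
    suggestions = []
    for m in meetings:
        recent = timedelta(0) <= now - m.ended_at <= window
        followed_up = any(e.to == m.attendee and e.sent_at >= m.ended_at for e in sent)
        if recent and not followed_up:
            name = m.attendee.split("@")[0].capitalize()
            suggestions.append(
                f"Looks like you're following up after '{m.title}' with {name}. "
                "Want me to draft a thank-you with the key takeaways?"
            )
    return suggestions
```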

“AI shouldn’t be guessing; it should be informed, because we’ve taught it where to look.”

Kirill Lazarev

This approach requires:

- Cross-platform data awareness

- Smart triggers (not just manual input)

- UX that surfaces AI suggestions proactively, not buried behind clicks

Even Gmail shows glimmers of this. When threads get long, it suggests a summary. That’s anticipatory design — AI acting before the user asks.
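A smart trigger can be as simple as a threshold check that runs whenever a thread updates. The Python sketch below is illustrative only: `Thread`, `should_offer_summary`, and both thresholds are assumptions to be tuned, not Gmail’s actual logic.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical thresholds; a real product would tune them against usage data.
MIN_MESSAGES = 8
MIN_WORDS = 600


@dataclass
class Thread:
    messages: list[str]
    user_has_seen_summary: bool = False


def should_offer_summary(thread: Thread) -> bool:
    """Offer a summary proactively, but only when the thread is long enough to need one."""
    if thread.user_has_seen_summary:
        return False  # avoid nagging once the user has already used it
    word_count = sum(len(m.split()) for m in thread.messages)
    return len(thread.messages) >= MIN_MESSAGES or word_count >= MIN_WORDS
```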

Tools over features: let users build their own agents

Most teams build features. Smart teams build tools. Think of an AI product less as a finished object and more as a creative sandbox where users hold the shovel.

Consider these examples:

- Cursor: Lets devs create AI co-pilots for coding.

- Windsurf: Enables end-users to build agents from prompt templates.

- Zapier + OpenAI: Offers no-code automation for internal ops.

When users create workflows in natural language, they stop being passive consumers and become creators.

Designing tools means:

- Allowing users to combine inputs (text, context, history)

- Supporting custom prompts and instructions

- Enabling teams to save and share workflows
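These three properties fit naturally into a small, serializable workflow definition. The Python sketch below is hypothetical (`Workflow`, `export_workflow`, and `import_workflow` are illustrative names); it combines a user-written instruction with chosen inputs and round-trips the result through JSON so a team can share it.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class Workflow:
    name: str
    instructions: str  # the user's own natural-language prompt
    inputs: list[str] = field(default_factory=list)  # e.g. "selected_text", "thread_history"
    owner: str = ""

    def render(self, context: dict[str, str]) -> str:
        """Combine the user's instructions with whichever inputs they selected."""
        missing = [k for k in self.inputs if k not in context]
        if missing:
            raise KeyError(f"missing inputs: {missing}")
        parts = [self.instructions] + [f"[{k}]\n{context[k]}" for k in self.inputs]
        return "\n\n".join(parts)


def export_workflow(wf: Workflow) -> str:
    """Serialize to JSON so a teammate can import the same workflow."""
    return json.dumps(asdict(wf), sort_keys=True)


def import_workflow(payload: str) -> Workflow:
    return Workflow(**json.loads(payload))
```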

Don’t fall into the “horseless carriage” trap

The first cars looked like carriages without horses. The first mobile apps looked like mini desktop sites.

We’re seeing the same mistake with AI: old workflows + new tech. Retrofitting AI into existing products without rethinking UX is a waste of potential.

“Adapting isn’t enough. Transform. The best AI products are those that couldn’t function without it.”

Kirill Lazarev

According to McKinsey’s 2024 report, The State of AI, 72% of companies now use AI in at least one function, and 65% report regularly using generative AI, nearly double the share from the previous survey. Yet only 1% consider themselves “AI mature,” having moved from pilot programs to scalable impact.

The gap between investment and outcome is stark. While 51–66% of teams report local wins from AI, few attribute more than 10% of EBIT to it.

The takeaway: the technology is there, but without intentional design, transparency, and control, AI fails to deliver long-term value.

📚 Extra resource: The term “horseless carriage” was popularized in the AI context by Pete Koomen, a GP at YC and co-founder of Optimizely. We highly recommend reading his essay on the topic.

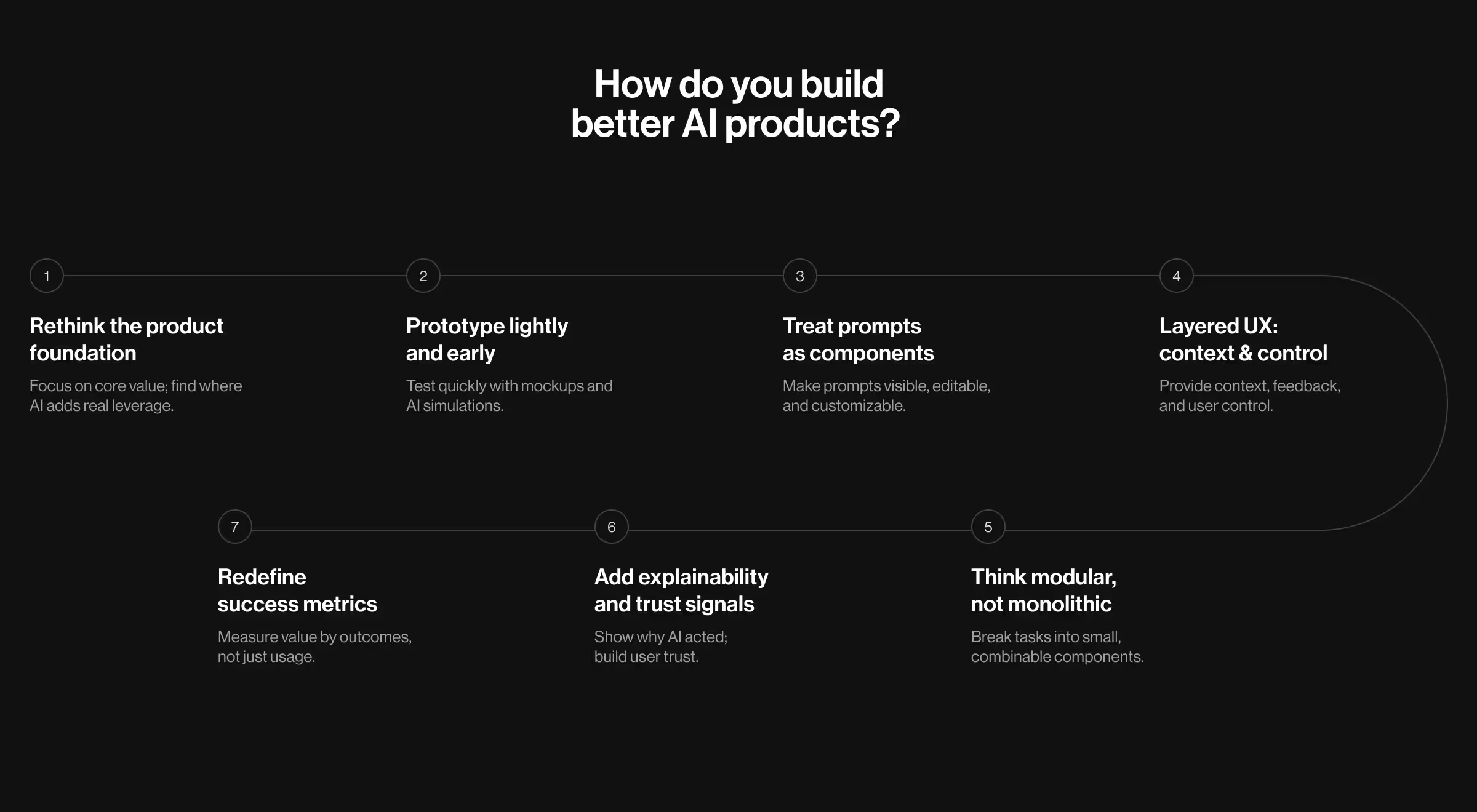

So how do you build better AI products?

1. Rethink the product foundation

Start from first principles. Pinpoint users’ real pain points and their essential jobs-to-be-done instead of trying to bolt on AI.

- Audit workflows: Map friction points, decision trees, repeatable tasks.

- Find AI leverage: For each task, ask: “Will this be faster, smarter, or more scalable with AI?”

- Go beyond integrating AI into features: Rethink how work is done.
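A workflow audit can end in a simple ranked list. The Python sketch below uses a deliberately crude, hypothetical heuristic: weekly minutes spent, counting only tasks that are repeatable and don’t need human judgment. The `Task` fields and the ranking rule are assumptions, meant as a starting point for discussion rather than a method.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Task:
    name: str
    runs_per_week: int
    minutes_per_run: float
    repeatable: bool      # follows a recognizable pattern each time
    needs_judgment: bool  # a high-stakes call a human should own


def weekly_minutes(task: Task) -> float:
    return task.runs_per_week * task.minutes_per_run


def ai_candidates(tasks: list[Task]) -> list[tuple[str, float]]:
    """Rank repeatable, lower-stakes tasks by weekly time spent (a rough proxy for leverage)."""
    scored = [
        (t.name, weekly_minutes(t))
        for t in tasks
        if t.repeatable and not t.needs_judgment
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```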

2. Prototype lightly and early

You don’t need full development to validate LLM-powered tools.

Use tools such as:

- Figma or UXPin for interface mockups

- ChatGPT or Claude to simulate logic

Test for:

- Input/output alignment

- User control vs automation

- “Whoa” moments of unexpected value
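Once a prototype prompt exists, a few fixture inputs turn “input/output alignment” into something you can check repeatedly. In this Python sketch the `model` argument is any function you supply (a wrapper around ChatGPT or Claude, or a fake for offline tests); `run_checks` and the case fields `must_include` and `max_words` are hypothetical.

```python
from __future__ import annotations

from typing import Callable

# Any function from (system_prompt, user_input) to output text.
Model = Callable[[str, str], str]


def run_checks(model: Model, system_prompt: str, cases: list[dict]) -> list[str]:
    """Run fixture inputs through the model and describe every failed expectation."""
    failures = []
    for case in cases:
        output = model(system_prompt, case["input"])
        for phrase in case.get("must_include", []):
            if phrase.lower() not in output.lower():
                failures.append(f"{case['input']!r}: missing {phrase!r}")
        max_words = case.get("max_words")
        if max_words is not None and len(output.split()) > max_words:
            failures.append(f"{case['input']!r}: {len(output.split())} words > {max_words}")
    return failures


# Usage with a fake model, so the harness runs without any API key.
def fake_model(system_prompt: str, user_input: str) -> str:
    return f"Thanks for the call. Next step: {user_input}."
```

Swapping `fake_model` for a real model wrapper keeps the same checks, so a prompt tweak that breaks an expectation shows up immediately.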

3. Treat system prompts like product components

Most LLM behavior happens before the user types. That’s your system prompt.

- Make it editable or visible

- Let users define tone, goals, task scope

- Offer personas or sample prompts to customize behavior
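Treating the prompt as a component also means versioning it, so user edits can be reviewed and undone. A minimal Python sketch, assuming a hypothetical `PromptComponent` class that keeps every version in memory; a real product would persist history per user or per workspace.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PromptComponent:
    """A system prompt managed like any other product component: versioned and reversible."""
    name: str
    versions: list[str] = field(default_factory=list)

    @property
    def current(self) -> str:
        return self.versions[-1]

    def update(self, new_text: str) -> int:
        """Store a new version (e.g. after a user edits tone or scope); return its number."""
        if self.versions and new_text == self.current:
            return len(self.versions)  # identical edit: keep history clean
        self.versions.append(new_text)
        return len(self.versions)

    def rollback(self) -> str:
        """Undo the latest edit, but never drop the first version."""
        if len(self.versions) > 1:
            self.versions.pop()
        return self.current
```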

4. Build layered UX: context, feedback, control

AI UX isn’t just an input field. Design processes where AI learns, adapts, and visibly improves.

Great products include:

- Context: User history, metadata, external APIs

- Feedback: Let users rate, edit, and adjust AI output
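Feedback becomes useful when repeated signals turn into standing behavior. The Python sketch below assumes a hypothetical `Feedback` record that logs draft length before and after the user’s edits; the 30% thresholds and the majority rule in `learned_preferences` are illustrative choices, not established values.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Feedback:
    draft_words: int  # length of the AI draft
    final_words: int  # length after the user's own edits


def learned_preferences(history: list[Feedback], min_events: int = 3) -> list[str]:
    """Turn edits the user keeps repeating into instructions for future prompts."""
    if len(history) < min_events:
        return []  # too little signal to change behavior yet
    signals = Counter()
    for fb in history:
        if fb.final_words < 0.7 * fb.draft_words:
            signals["shorter"] += 1
        elif fb.final_words > 1.3 * fb.draft_words:
            signals["longer"] += 1
    prefs = []
    if signals["shorter"] > len(history) / 2:
        prefs.append("Keep drafts noticeably shorter.")
    if signals["longer"] > len(history) / 2:
        prefs.append("Include more detail in drafts.")
    return prefs
```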

5. Think modular, not monolithic

Design with “particles” — small, atomic tasks AI can handle:

- Summarizing content

- Translating tone

- Categorizing feedback

Later, combine them. Modular AI products are:

- Easier to test

- Safer to launch

- More adaptable across industries
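Particles compose well when each one is a plain function with a narrow contract. In this Python sketch, `summarize` and `categorize` are naive rule-based stand-ins for what would normally be separate model calls, and `pipeline` is a hypothetical helper that chains them; each piece can be tested and shipped on its own.

```python
from __future__ import annotations

from typing import Callable


def summarize(text: str, max_sentences: int = 1) -> str:
    """Stand-in particle: keep the first sentence(s)."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return ". ".join(sentences[:max_sentences]) + "."


def categorize(text: str) -> str:
    """Stand-in particle: keyword-based feedback category."""
    lowered = text.lower()
    if any(w in lowered for w in ("crash", "error", "broken")):
        return "bug"
    if any(w in lowered for w in ("please add", "would love", "wish")):
        return "feature request"
    return "other"


Step = Callable[[dict], dict]


def pipeline(*steps: Step) -> Step:
    """Compose particles left to right; each step adds a field to the record."""
    def run(record: dict) -> dict:
        for step in steps:
            record = step(record)
        return record
    return run


triage = pipeline(
    lambda r: {**r, "category": categorize(r["text"])},
    lambda r: {**r, "summary": summarize(r["text"])},
)
```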

6. Add explainability and trust signals

Users hesitate when they don’t understand why AI did something.

Use:

- Source citations

- Short “why” blurbs (e.g., “Because Jane asked last week”)
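Both trust signals can travel with the suggestion itself, so the interface never shows output without its explanation. A minimal Python sketch with a hypothetical `Suggestion` record and `render` function:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Suggestion:
    text: str
    reason: str                                       # the short "why" blurb
    sources: list[str] = field(default_factory=list)  # citations backing the suggestion


def render(s: Suggestion) -> str:
    """Format a suggestion with its reason and numbered sources."""
    if not s.reason:
        # Treat an unexplained suggestion as a bug instead of showing it silently.
        raise ValueError("suggestion has no reason")
    lines = [s.text, f"Why: {s.reason}"]
    lines += [f"[{i}] {src}" for i, src in enumerate(s.sources, start=1)]
    return "\n".join(lines)
```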

7. Redefine success metrics

AI product success ≠ daily active users.

Instead, track:

- Time saved per task

- Success rate of AI-supported actions

- Prompt diversity (one use case or many?)

- Number of agents or workflows created by users
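These metrics can be computed from a simple event log. The Python sketch assumes a hypothetical `TaskEvent` record; in practice `baseline_minutes` would come from measuring the task without AI, and “accepted” has to be defined per product (kept as-is, kept after edits, and so on).

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskEvent:
    use_case: str            # e.g. "summarize", "draft_reply"
    baseline_minutes: float  # typical time without AI (measured or estimated)
    actual_minutes: float    # time with AI assistance
    accepted: bool           # whether the user kept the AI result


def ai_metrics(events: list[TaskEvent], workflows_created: int) -> dict[str, float]:
    """Summarize time saved, success rate, and breadth of use from logged events."""
    return {
        "avg_minutes_saved": mean(e.baseline_minutes - e.actual_minutes for e in events),
        "success_rate": sum(e.accepted for e in events) / len(events),
        "distinct_use_cases": len({e.use_case for e in events}),
        "workflows_created": workflows_created,
    }
```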

Final thoughts: AI won’t fix a broken product

AI won’t replace clarity of purpose, good UX design, or a strategy that puts real user needs first. The difference between AI that dazzles for a week and AI that delivers long-term value lies in how you build it: not as a gimmick, but as a system.

Designing better AI products means designing for transparency, trust, and transformation. It’s about offering real control, meaningful context, and visible value, instead of dressing up autocomplete as innovation. And it requires bold decisions: choosing to rebuild workflows instead of tweaking around the edges.

If you’re looking to hire experienced AI designers, we’d be happy to help.
