Building a new product without UX testing is like adding yet another streaming app to an already overcrowded home screen — no one notices until something breaks.

Streamingbar, a startup determined to become a single, social hub for film lovers, felt that pain early: users bounced between Netflix, Hulu, and Amazon while the founders juggled 164 feature ideas and no data to show which ones mattered.

Rather than shipping on guesswork, they turned to Lazarev.agency for a focused website usability testing initiative. Discovery interviews and competitor audits surfaced the real blockers; structured tests trimmed the backlog to ten evidence‑backed hypotheses and spotlighted four high‑impact features: a unified search, lightweight social feed, personal stats dashboard, and cohesive brand language. Clear priorities replaced noise, letting the team design with confidence and avoid costly rework.

This guide shows how to run faster, sharper usability testing on your own product website, using the same playbook.

Key takeaways

- Discovery first. For Streamingbar, 20 user interviews, a 6‑competitor audit, and 40 must‑have items produced 10 testable ideas, trimming 154 low‑value concepts from the original 164. Your product will need its own approach, but it should still start with discovery.

- Decision‑driven tests. Each hypothesis had a metric and a “kill/keep/scale” rule, so results flowed straight into backlog triage.

- The tool stack grows with maturity. Start with lightweight remote usability testing and scale to eye‑tracking or split‑URL experiments as culture shifts from opinion to evidence.

- Common pitfalls. Testing too late, tracking the wrong KPIs, recruiting biased panels, and skipping synthesis. Avoid them and you will see the lift in user satisfaction.
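To make the “kill/keep/scale” rule concrete, here is a minimal Python sketch of pre-agreed decision thresholds. It assumes each hypothesis is judged on a single metric (for example, a task-completion rate) with a kill threshold and a scale threshold fixed before testing. The `Hypothesis` class, the 0.5 and 0.8 thresholds, and the sample results are hypothetical illustrations, not Streamingbar's actual criteria.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    KILL = "kill"
    KEEP = "keep"
    SCALE = "scale"


@dataclass(frozen=True)
class Hypothesis:
    """A testable idea with pre-agreed decision thresholds (hypothetical structure)."""

    name: str
    kill_below: float   # observed metric below this -> drop the idea
    scale_above: float  # observed metric at or above this -> invest further

    def __post_init__(self) -> None:
        # Thresholds must leave a "keep" band between kill and scale.
        if self.kill_below >= self.scale_above:
            raise ValueError("kill_below must be lower than scale_above")

    def decide(self, observed: float) -> Decision:
        """Map an observed metric value to a backlog action."""
        if observed < self.kill_below:
            return Decision.KILL
        if observed >= self.scale_above:
            return Decision.SCALE
        return Decision.KEEP  # between the thresholds: keep it, keep testing


def triage(results: dict[str, float], hypotheses: list[Hypothesis]) -> dict[str, Decision]:
    """Return a decision for every hypothesis that has an observed result."""
    return {h.name: h.decide(results[h.name]) for h in hypotheses if h.name in results}


if __name__ == "__main__":
    # Hypothetical thresholds and results, for illustration only.
    plan = [
        Hypothesis("unified_search", kill_below=0.5, scale_above=0.8),
        Hypothesis("ar_posters", kill_below=0.5, scale_above=0.8),
        Hypothesis("stats_dashboard", kill_below=0.5, scale_above=0.8),
    ]
    observed = {"unified_search": 0.85, "ar_posters": 0.30, "stats_dashboard": 0.65}
    for name, decision in triage(observed, plan).items():
        print(f"{name}: {decision.value}")
```

Fixing the thresholds before the sessions run is the point of this design: each result maps directly to a backlog action, so the team debates the criteria once, up front, instead of after every round.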

Move from assumption to hypothesis

Early user research mapped the core pain points: app‑hopping to find films, social FOMO, and frustration with endless scrolling. As Nielsen Norman Group points out, the earlier the research happens, the more impact its findings have on the product.

See how the raw inputs were distilled into an actionable test plan for Streamingbar:

“Evidence beats intuition; slicing ideas to ten gave engineering one clear sprint.”

Oleksandr Koshytskyi

Design a lean test plan

Usability testing methods vary, but Streamingbar kept the plan tight:

A concise usability testing script reduces moderator bias and lets teams compare quantitative data across cycles.

User testing platform stack

Choose user testing platforms that match your budget and risk level:

🔍 Pro tip: Start with remote user testing. It needs no travel, reaches a wider pool of target users, and allows quick iteration.

Usability testing tools to start in 14 days

Need a quick launch path? Use the timeline below to see how a small team can plan, run, and iterate a round of UX testing in just two weeks, without pausing the main development sprint.

🔍 Pro tip: Use these usability testing tools first; enterprise suites can wait until the team trusts the loop.

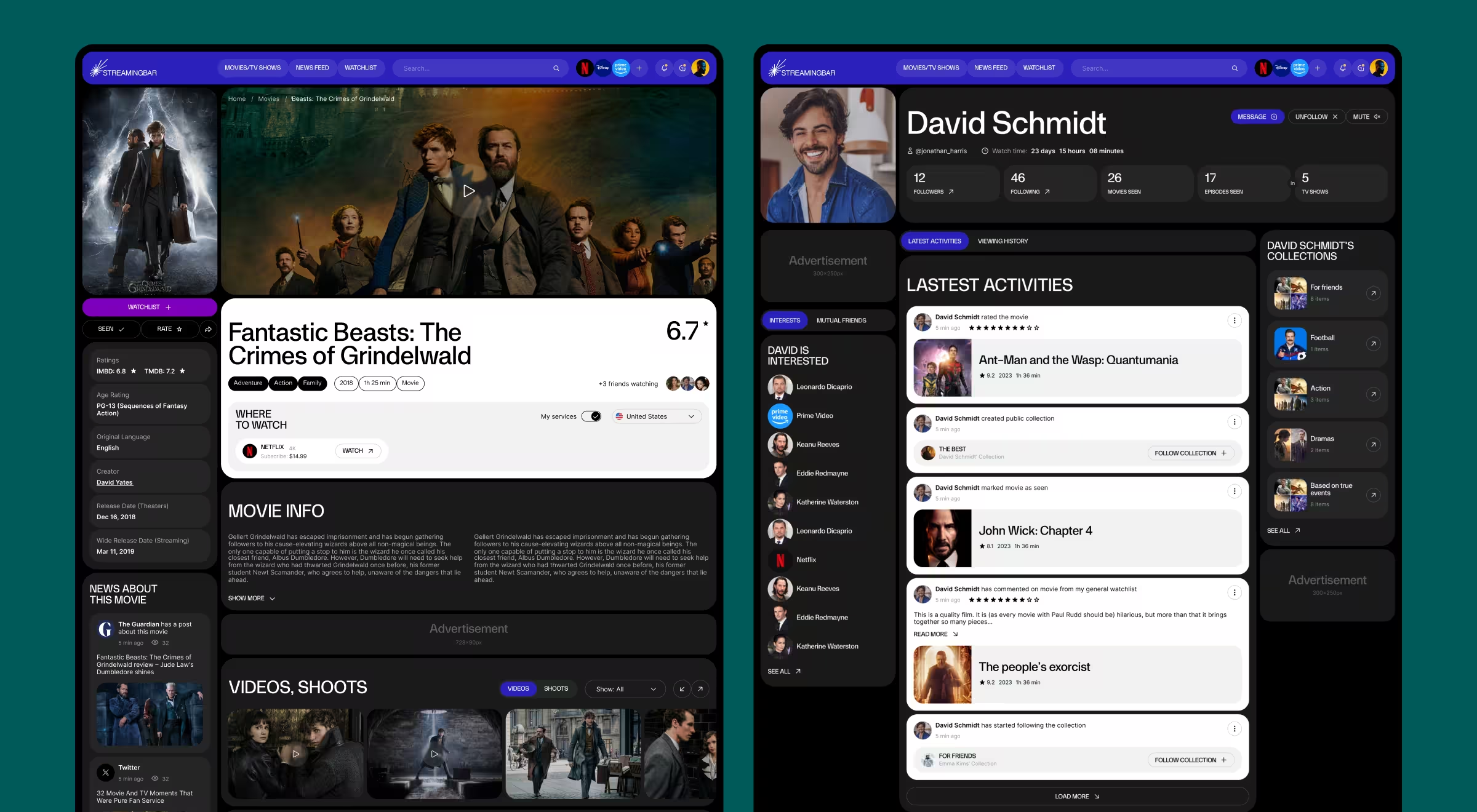

Usability testing examples from Streamingbar

Streamingbar killed flashy but wasteful ideas like AR posters and live co‑watch chat, banking on four winning bets instead:

- Unified cross‑platform search

- Lightweight social feed

- Personal stats dashboard

- Cohesive brand identity

Result: Usability sessions showed that real users completed the target “find‑and‑play” task confidently. Qualitative notes flagged only copy tweaks, not flow overhauls, which confirmed that the core flows worked smoothly before code freeze.

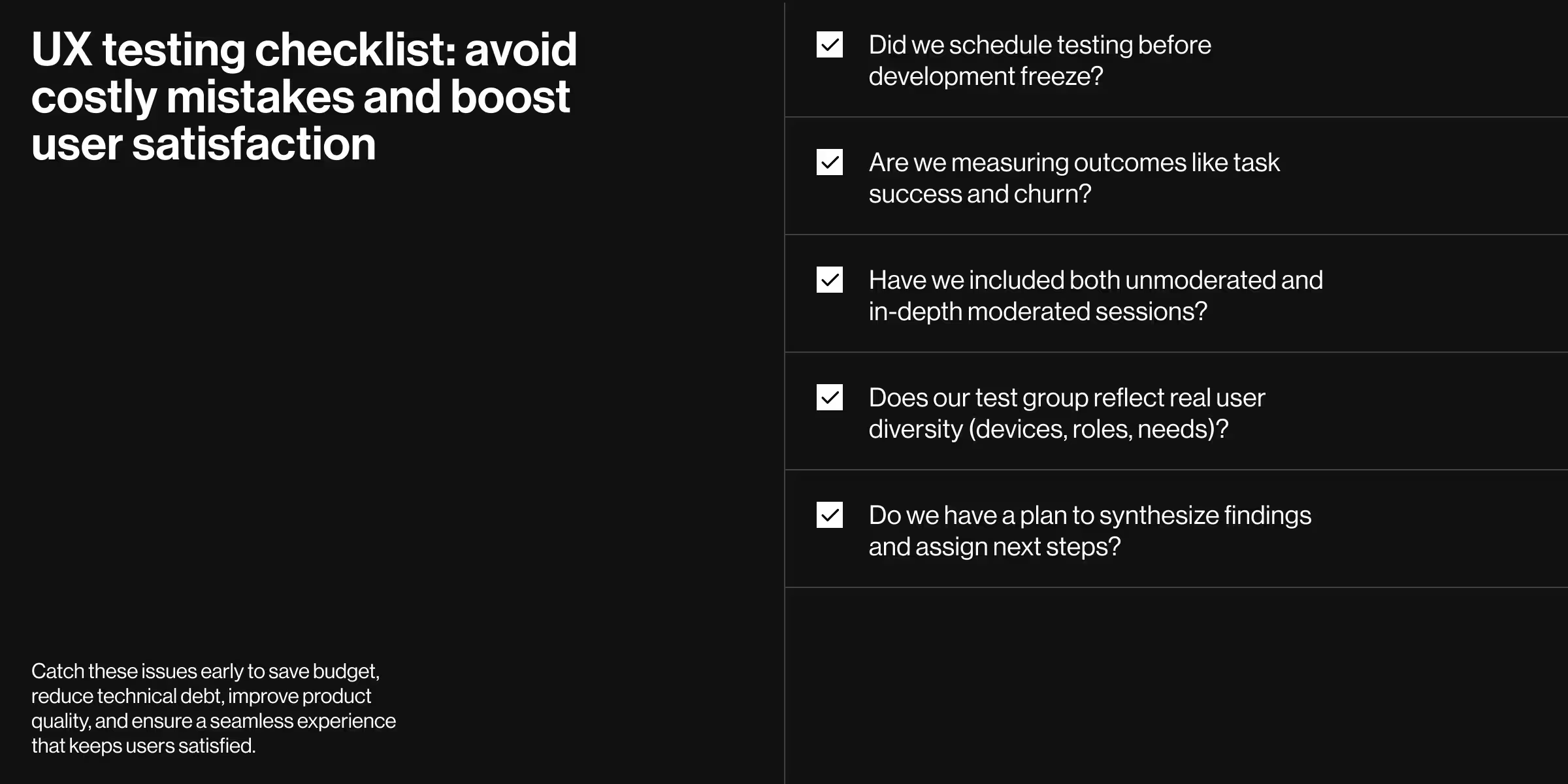

Common UX testing mistakes to avoid

Even seasoned teams slip up when they conduct usability testing under deadline pressure. Use the cheat sheet below as a reality check before every research cycle; catching these pitfalls early saves budget, credibility, and, most importantly, user satisfaction.

- Testing after dev freeze: fixes morph into costly tech debt once code is merged.

- Wrong KPIs: vanity clicks ≠ positive user experience; track task completion, churn, and revenue impact instead.

- One‑and‑done unmoderated usability testing: always pair scale with depth, because qualitative sessions reveal why users struggle.

- Homogeneous panels: recruit diverse test participants who reflect both mobile and desktop target users.

- Skipping synthesis: raw numerical data is just noise if you don’t prioritise issues and assign owners.
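Task completion, the KPI recommended above, is easy to over-read when a round has only a handful of participants. The minimal Python sketch below assumes one pass/fail outcome per participant and reports the completion rate together with an adjusted-Wald (Agresti-Coull) 95% confidence interval, a common choice for small usability samples. The `completion_rate` helper and the session data are hypothetical.

```python
import math


def completion_rate(outcomes: list[bool], z: float = 1.96) -> tuple[float, float, float]:
    """Return (observed rate, lower bound, upper bound) via the adjusted-Wald interval.

    outcomes: one boolean per participant, True if the task was completed.
    z: critical value; 1.96 corresponds to a 95% confidence level.
    """
    n = len(outcomes)
    if n == 0:
        raise ValueError("need at least one session")
    successes = sum(outcomes)
    observed = successes / n
    # Adjusted Wald: add z^2/2 successes and z^2/2 failures, then apply the Wald formula.
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    # Clamp so the interval stays within valid proportions.
    return observed, max(0.0, p_adj - margin), min(1.0, p_adj + margin)


if __name__ == "__main__":
    # Hypothetical round: 8 of 10 participants completed the "find-and-play" task.
    sessions = [True] * 8 + [False] * 2
    rate, low, high = completion_rate(sessions)
    print(f"completion {rate:.0%}, 95% CI {low:.0%} to {high:.0%}")
```

For 8 completions out of 10 sessions, the interval spans roughly 48% to 95%, which is why a single small round shows direction rather than a precise benchmark.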

Address these five traps, and every round of UX testing becomes a compounding asset in your product development lifecycle.

❓ Want a reality check on what happens when teams skip early research? Read our deep dive on the risks of skipping UX research.

UX testing maturity model

The matrix below lets the product team benchmark its current practice and spot the next realistic upgrade before processes stall or budgets dry up.

🔍 Pro tip: Climb one rung per quarter; any slower and usability issues hide in the backlog.

Where to learn more about usability testing methods

For a fast overview of nearly every test format in the playbook, watch the “101 types of UX testing & the benefits” video from Maze — it’s a concise 12‑minute refresher you can share with the whole product team. In short:

- Qualitative usability testing focuses on depth: think five in‑person usability testing sessions with think‑aloud tasks.

- Quantitative usability testing focuses on scale: hundreds of remote usability recordings to spot patterns.

Blend both to cover blind spots and validate that users struggle less with every deployment.
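Why can five qualitative sessions be enough for depth? One common rule of thumb is the problem-discovery model, in which the share of problems seen at least once by n participants is 1 - (1 - p)^n. The minimal Python sketch below assumes every problem has the same detection probability p per participant; the default p = 0.31 is the often-cited average from Nielsen and Landauer's research and is an assumption here, not a Streamingbar measurement. The `share_of_problems_found` helper is hypothetical.

```python
def share_of_problems_found(n_participants: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems seen at least once by n participants.

    Uses the problem-discovery model 1 - (1 - p)^n, assuming every problem is
    equally likely (p_detect, default is an assumed average) to surface for
    each participant, independently.
    """
    if n_participants < 0 or not 0.0 <= p_detect <= 1.0:
        raise ValueError("n_participants must be >= 0 and p_detect must be in [0, 1]")
    return 1.0 - (1.0 - p_detect) ** n_participants


if __name__ == "__main__":
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} participants -> {share_of_problems_found(n):.0%} of problems")
```

Under that assumption, five participants surface about 84% of problems. Rare problems, or problems specific to one user segment, have a much lower p, which is another reason to blend small qualitative rounds with quantitative testing at scale.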

Turn metrics into momentum

Disciplined UX testing shrinks risk, boosts user satisfaction, and accelerates the product development lifecycle.

Curious how these frameworks could reshape your roadmap?

Lazarev.agency’s UX research team will conduct usability testing, turn user feedback into actionable insights, and wire evidence into your next sprint.

Explore our services or book a discovery call — let’s translate your data into design wins!
