To build complex digital products, you need skilled partners who can identify pain points, turn user feedback into design decisions, and ship fixes on tight timelines. Teams can do this in-house, but external partners bring speed, unbiased recruiting, and sharper synthesis.

This guide compares usability testing companies and platforms, clarifies what great user testing looks like in practice, and shows why Lazarev.agency stands out with a case that proves the approach.

Who will benefit: product leaders, design directors, and founders shipping AI, fintech, or B2B digital products, plus teams that need evidence-driven usability research built into the product development process.

Key takeaways

- The best usability testing programs mix moderated and unmoderated sessions. They use both remote and in-person formats, and blend qualitative discovery with quantitative research.

- Expect hard evidence: success rates, time on task, clips of where users stall, journey notes, heatmaps that show where users click, and an ordered list of actionable recommendations.

- Outsourcing beats in-house when you need the right participants fast, unbiased feedback, and synthesis that shapes the next sprint.

- See it applied: Lazarev.agency’s Accern Rhea case demonstrates hybrid GUI + prompt UX, report-builder mode, and a split-screen research workflow designed around real users.

The best usability testing companies to hire

Lazarev.agency

- Specialty: End-to-end UX with evaluative studies embedded into design, AI and data-heavy digital products.

- Best for: Teams that need UX research plus iterative user testing tied to roadmap outcomes.

- Unique selling point: Research-to-design pipeline that produces actionable insights fast (e.g., clips, findings, and severity-ranked usability issues that translate into tickets).

- Notable advantage: Decision-ready deliverables, with findings turned into prioritized tickets and interface updates within the same sprint cadence.

AnswerLab

- Specialty: Enterprise-grade moderated studies and live user interviews.

- Best for: Global programs that must recruit participants fast and handle executive stakeholders.

- Unique selling point: Depth in facilitation across regulated spaces.

- Notable advantage: Strong analysis discipline and templates that deliver results under tight timelines.

Nielsen Norman Group (NN/g)

- Specialty: Methodology canon, training, and heuristics used as industry standards.

- Best for: Teams formalizing their testing process and scaling researcher craft.

- Unique selling point: Evidence-backed frameworks that de-risk decisions.

- Notable advantage: Education that raises the bar for moderators and observers.

Blink UX

- Specialty: Research-driven service and product design.

- Best for: Multi-touchpoint journeys where user experience evidence must influence operations.

- Unique selling point: Field context plus lab rigor.

- Notable advantage: Reports that make data-driven decisions straightforward for non-research stakeholders.

MeasuringU

- Specialty: Benchmarking, SUS, and task-level metrics.

- Best for: Before/after comparisons and longitudinal improvement tracking.

- Unique selling point: Statistical depth on user satisfaction and efficiency.

- Notable advantage: Ready-made frameworks for quantitative user insights.

Baymard Institute

- Specialty: Ecommerce research and checkout usability.

- Best for: Conversion-critical flows on websites and mobile apps.

- Unique selling point: Pattern libraries validated by tests with real users.

- Notable advantage: Clear fixes for common usability issues.

Bold Insight

- Specialty: Regulated and safety-critical contexts.

- Best for: Medtech and automotive, where compliance and participant safety matter.

- Unique selling point: Documentation and protocols aligned to standards.

- Notable advantage: Access to niche target users and the right participants for specialized studies.

Applause

- Specialty: In-the-wild remote usability testing at scale.

- Best for: Device and locale coverage under tight timelines.

- Unique selling point: A massive tester base that supports quick cycles.

- Notable advantage: Pressure-testing designs beyond the lab to spot usability issues early.

🔍 To turn this comparison into a confident choice, use the criteria outlined in our guide to hiring a design agency to identify which partner can actually deliver outcomes that matter.

The best usability testing platforms to use

UserTesting

- Specialty: On-demand user testing with recruiting baked in.

- Best for: Frequent directional checks between sprints; pricing models are transparent by tier.

- Unique selling point: Fast sourcing of participants and highlight reels.

- Notable advantage: Useful for early signal before heavier research.

Dscout

- Specialty: Diary studies that gather insights over days or weeks.

- Best for: Ecosystems where behaviors unfold over time.

- Unique selling point: Rich video entries from real users.

- Notable advantage: Complements task-based user testing with context.

Maze

- Specialty: Unmoderated prototype tests and iterative testing.

- Best for: Fast concept checks in the design process.

- Unique selling point: Rapid, quantitative readouts for product development.

- Notable advantage: Speed without heavy ops, often at a cost-effective price.

Lookback

- Specialty: Remote moderated user testing with live observation.

- Best for: Distributed teams who still need nuance from user interviews.

- Unique selling point: Observer notes and timestamping.

- Notable advantage: Low friction for continuous studies across tools you already use.

Optimal Workshop

🔗https://www.optimalworkshop.com/

- Specialty: IA methods (card sorting, tree testing).

- Best for: Navigation changes on website or mobile app.

- Unique selling point: Purpose-built IA suite that surfaces user behavior signals.

- Notable advantage: Outputs that guide structure for your target audience.

PlaybookUX

- Specialty: End-to-end user testing stack.

- Best for: Smaller teams standardizing their testing process.

- Unique selling point: Automation that keeps the research cadence steady.

- Notable advantage: Helps teams gather feedback and turn it into actionable recommendations without adding headcount.

Userlytics

- Specialty: Global reach and device coverage.

- Best for: Products expanding to new customers across markets and regions.

- Unique selling point: Broad panels plus flexible in-person or remote options.

- Notable advantage: Quick checks for localization and feature fit.

What the best usability testing vendors offer

The strongest services combine method depth, clean ops, and deliverables that your designers can use the same day. This is where top usability testing vendors differentiate.

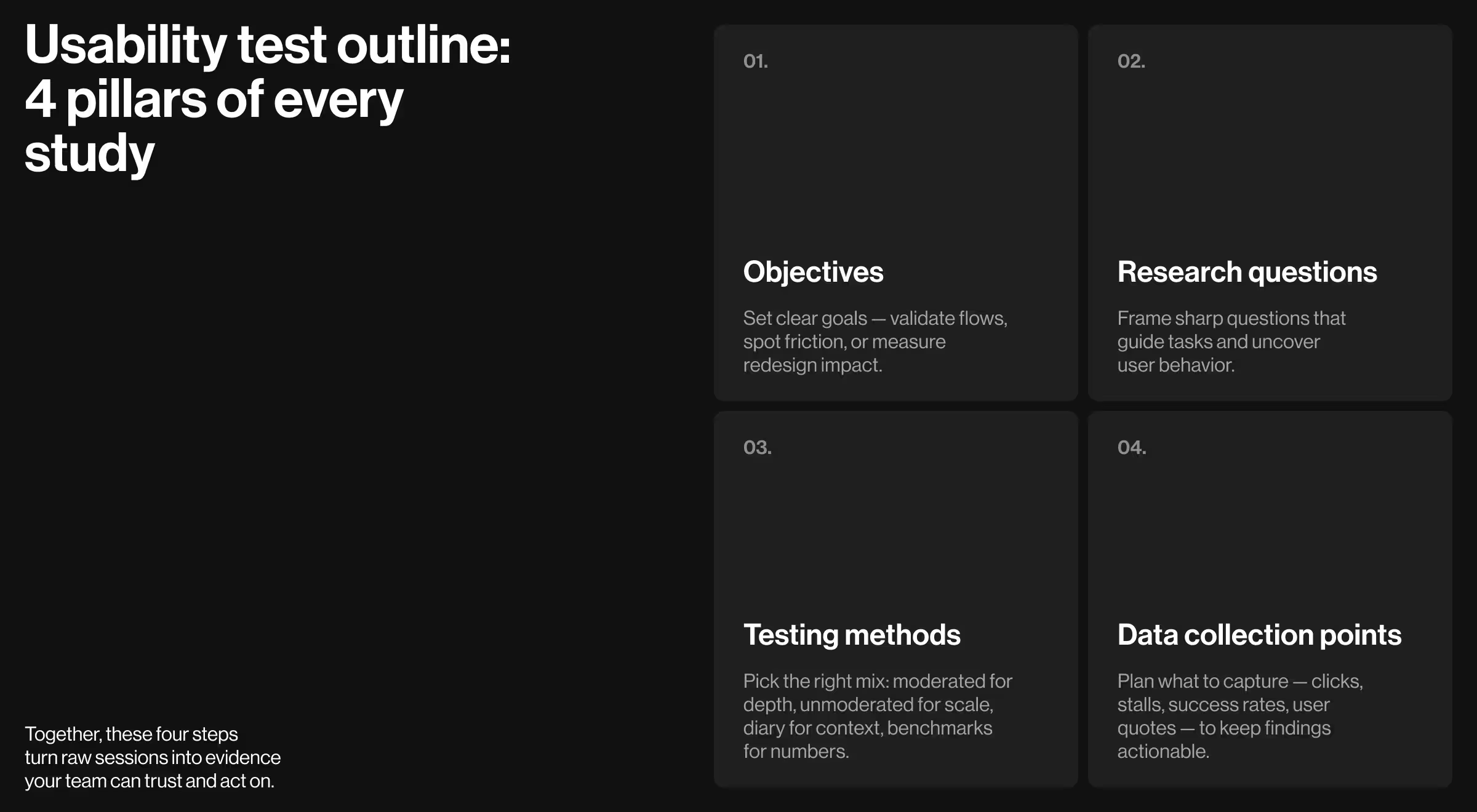

Usability test outline: the backbone of any study

Behind every strong usability test is a clear structure. Without one, even the best recruiting or facilitation won’t produce insights you can trust. The outline that top usability testing companies follow typically rests on four pillars:

- Objectives. Define what you want to accomplish. Is the goal to validate a new flow, uncover friction in onboarding, or measure the success of a website redesign? Clarity here keeps the study lean and relevant.

- Research questions. Good usability testing isn’t about watching users click around. It’s about answering specific questions: Can users find the checkout button without guidance? Do they understand what each dashboard metric represents? Sharp questions direct the test and shape meaningful findings.

- Testing methods. Choose the right method for the problem. Moderated interviews bring depth, unmoderated tests bring scale, diary studies bring context, and benchmarking adds numbers. The method must align with whether you need directional insights or confirmatory evidence.

- Data collection points. Plan in advance what you’ll capture. Timestamps of stalls, heatmap clicks, task success rates, and user quotes should all map back to your objectives. A structured collection framework makes synthesis faster and keeps results decision-ready.
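To illustrate the data collection points above, here is a minimal Python sketch that rolls raw session records into per-task success rates and median time on task. The `SessionResult` record and its field names are hypothetical; real testing platforms export their own schemas, so treat this as a shape to adapt, not a vendor format.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class SessionResult:
    """One participant's attempt at one task (hypothetical schema)."""
    participant: str
    task: str
    succeeded: bool
    seconds: float  # time on task, from task start to completion or give-up


def summarize(results: list[SessionResult]) -> dict[str, dict[str, float]]:
    """Return success rate and median time on task (successful attempts only) per task."""
    by_task: dict[str, list[SessionResult]] = {}
    for r in results:
        by_task.setdefault(r.task, []).append(r)

    summary = {}
    for task, attempts in by_task.items():
        successes = [a for a in attempts if a.succeeded]
        summary[task] = {
            "success_rate": len(successes) / len(attempts),
            # Median resists outliers, e.g. one participant who got distracted.
            "median_seconds": median(a.seconds for a in successes) if successes else float("nan"),
        }
    return summary


results = [
    SessionResult("p1", "checkout", True, 42.0),
    SessionResult("p2", "checkout", False, 120.0),
    SessionResult("p3", "checkout", True, 58.0),
    SessionResult("p4", "checkout", True, 50.0),
]
print(summarize(results))
# {'checkout': {'success_rate': 0.75, 'median_seconds': 50.0}}
```

Computing time on task only over successful attempts is one common convention; some teams report failed attempts separately so that give-ups do not inflate the timing.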

Together, these elements create a backbone for usability testing. They ensure that every session produces evidence tied to business goals. This is the framework the best usability testing vendors rely on to deliver insights your product team can act on immediately.

Deliverables you should expect

- Session recordings with red-flag clips and timestamps.

- Task outcomes (success/failure), completion times, and obstacles.

- Heatmaps or equivalents that visualize user behavior (where users click or hesitate).

- Accessibility observations and next steps.

- A single source of truth listing prioritized usability issues with effort estimates and owners, so teams can act on them and deliver results.
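A prioritized issue list like the one described above can be produced by sorting findings by severity first, then by effort, so cheaper fixes rise to the top within each severity band. The 1–4 severity scale, the S/M/L effort labels, and the `Issue` fields below are illustrative assumptions, not a standard any particular vendor is known to use.

```python
from dataclasses import dataclass

# Hypothetical effort labels mapped to a sortable cost (lower = cheaper).
EFFORT_COST = {"S": 1, "M": 2, "L": 3}


@dataclass
class Issue:
    title: str
    severity: int  # assumed scale: 4 = blocks task completion ... 1 = cosmetic
    effort: str    # "S", "M", or "L"
    owner: str


def prioritize(issues: list[Issue]) -> list[Issue]:
    """Most severe first; within the same severity, cheapest fix first."""
    return sorted(issues, key=lambda i: (-i.severity, EFFORT_COST[i.effort]))


backlog = prioritize([
    Issue("Dashboard metric labels unclear", 2, "S", "design"),
    Issue("Checkout button hidden on mobile", 4, "M", "frontend"),
    Issue("Error message lacks next step", 3, "S", "content"),
    Issue("Payment form resets on error", 4, "S", "frontend"),
])
for rank, issue in enumerate(backlog, start=1):
    print(rank, issue.severity, issue.effort, issue.title, f"({issue.owner})")
# 1 4 S Payment form resets on error (frontend)
# 2 4 M Checkout button hidden on mobile (frontend)
# 3 3 S Error message lacks next step (content)
# 4 2 S Dashboard metric labels unclear (design)
```

Each ranked row maps naturally onto a ticket, which is what makes the list usable in the next sprint.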

Methods used

- Moderated and unmoderated user testing, in person and remote.

- A/B or multivariate experiments when telemetry exists, and heuristic reviews to shape tasks.

- Clear guidance: facilitator scripts, a moderator plus note-taker model, and a method matched to the question (directional vs. confirmatory).

Advanced testing methodologies

For high-stakes flows, pair user testing with analytics reviews, intercepts, and benchmark studies. Keep the scope distinct: performance testing checks system speed, while usability testing evaluates the user experience.

🔍 For website flows, structure quietly drives outcomes — see why information architecture is the UX superhero under cover.

Why outsourcing beats in-house

External teams recruit participants faster, spot blind spots, and synthesize user insights into actionable recommendations that feed iterative development. Moderation craft also matters: avoiding leading questions and managing silence are learned skills.

How to compare usability testing vendors

Use these like-for-like criteria to evaluate website usability testing companies and platforms:

- Recruiting & sampling. Define target users, write tight screeners for your target audience, and make sure coverage includes participants with accessibility needs.

- Study design. Clear tasks, realistic context, and neutrality. Pilot to refine script; document scope and risks.

- Session capture. Screen, audio, observer notes. Secure storage for PII.

- Evidence package. Clips, heatmaps, and metrics, plus a severity-ranked backlog with effort estimates.

- Synthesis. Turn findings into actionable insights that drive improvement in the next sprint.

- Cadence. Weekly or biweekly cycles for iterative testing.

- Ops & tools. Choose a platform stack that your team will actually use; keep pricing models transparent.

- Outcomes. Tie work to customer satisfaction, user satisfaction, and business metrics.

Why Lazarev.agency is the best choice — case study proof

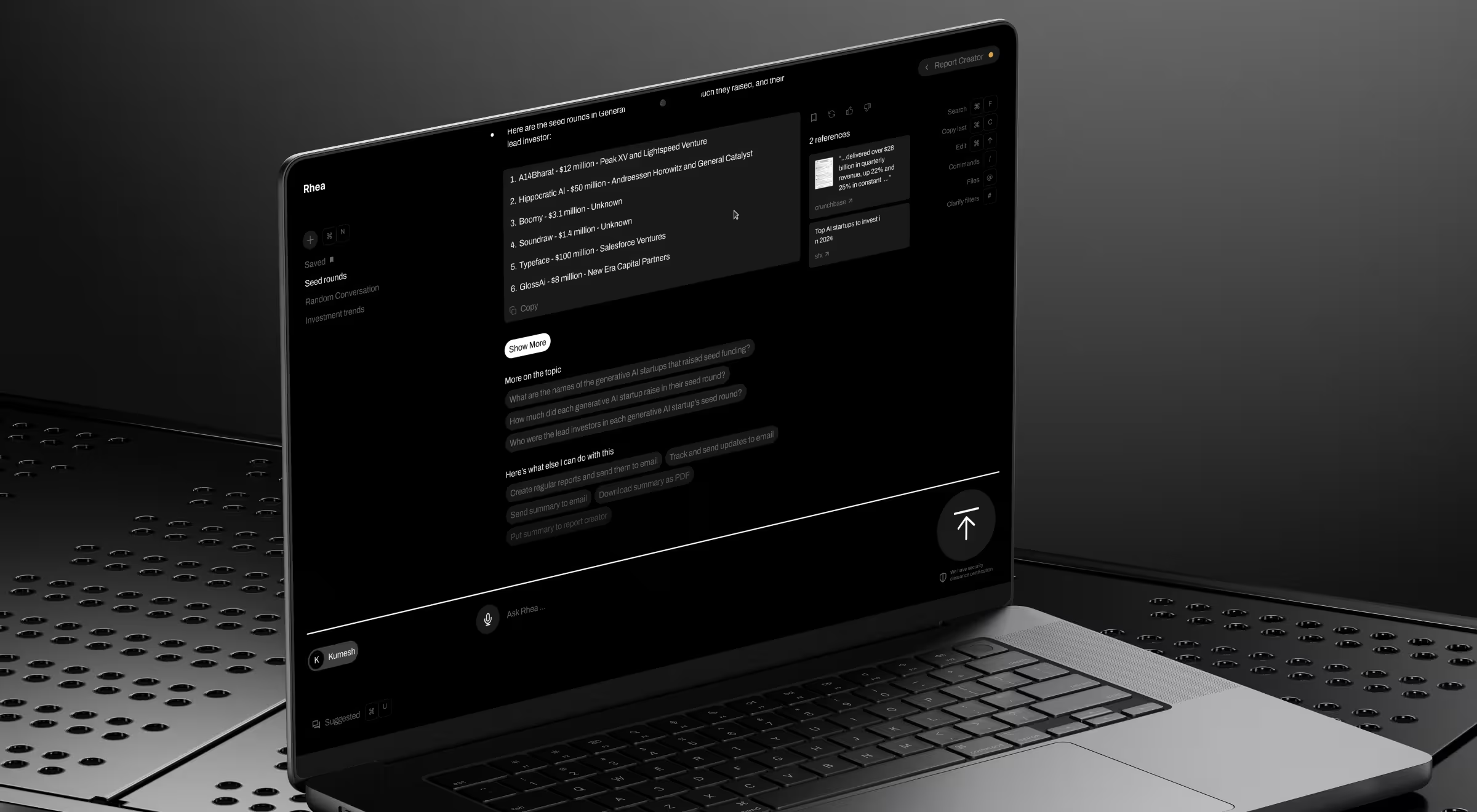

Accern, a leading NLP company in the U.S. and a Forbes Under 30 honoree, partnered with Lazarev.agency to build Rhea — a research tool for financial analysts, VC investors, and ESG specialists. The tool is powered by a pre-trained AI model that goes far beyond basic chatbot interactions.

In earlier work together, we created design patterns that set the standard and are now used by Anthropic, ChatAI, and other major AI players. This case shows our development of AI functionality that established a new market standard, now adopted by OpenAI and other industry leaders. It demonstrates why teams choose Lazarev.agency, an AI UX design agency, when the goal is research-driven, production-ready UX.

What we built for real researchers

- Hybrid interface beyond chat: dynamic widgets surface charts, tables, and references in response to prompts, letting analysts convert findings into report blocks without copy-paste.

- Split-screen workflow: research on the left, report assembly on the right — less context switching, faster product decisions.

- Report creator mode: a multi-purpose command field for search, alerts, and file actions within a single, focused surface.

Evidence and impact

The program contributed to a trajectory from Series B to acquisition, with $40M+ raised across the partnership. While funding outcomes depend on many factors, the case shows how researcher-centered UX creates a durable competitive edge.

Why it matters for testing

A research-first approach pays off: decisions grounded in the observed behavior of real users drive clarity, efficiency, and features aligned with user needs.

🔎 Explore the full case: Accern.Rhea.

Getting started fast

Here’s a simple, time-boxed plan you can run next sprint.

- If you need to understand user behavior this quarter, pick one risky flow and run a one-sprint evaluative study.

- Mix 5–7 moderated sessions with remote testing for scale.

- Gather feedback, synthesize it into a top-10 list of usability issues, and feed the results into design decisions for the next build.

- Repeat with a narrowed task set.
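The 5–7 session figure in the plan above is commonly justified with the problem-discovery model 1 − (1 − p)^n: the expected share of issues that surface at least once across n participants, when each issue independently affects a fraction p of users. The sketch below assumes p = 0.31, an average often cited from Nielsen and Landauer's work. Your product's real p may be lower, which would call for more sessions.

```python
def discovery_rate(p: float, n: int) -> float:
    """Expected share of problems seen at least once by n participants,
    assuming each problem independently affects a fraction p of users."""
    return 1 - (1 - p) ** n


ASSUMED_P = 0.31  # assumption: commonly cited average; measure your own where possible

for n in range(5, 8):
    print(n, f"{discovery_rate(ASSUMED_P, n):.0%}")
# 5 84%
# 6 89%
# 7 93%
```

The model also shows why repeating small rounds works: with a rarer issue (p = 0.1), five sessions would be expected to surface only about 41% of such problems, so a narrowed follow-up round catches what the first one missed.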

That’s how you turn research into actionable feedback and valuable insights without bloating scope.

Ready to de-risk your next release with tailored solutions and a rigorous testing process?

Explore our expert usability testing services!

Let’s talk about a one-sprint pilot that validates flows with real users and compiles a design-ready backlog.
